30 Jan

Azure Event Hub: A Comprehensive Guide

Azure Event Hub is a big data streaming platform and event ingestion service by Microsoft that enables real-time processing of millions of events per second from various sources. In today’s digital landscape, data is generated at lightning speed from countless sources: apps, devices, websites, and more. To harness this data in real time, Microsoft offers Azure Event Hub.

In this Azure tutorial, we will look into Azure Event Hub, a robust event streaming platform designed to handle massive amounts of data ingestion with high throughput and low latency. So let's get started.

What is Azure Event Hub?

Azure Event Hub is a fully managed, real-time data ingestion service offered by Microsoft Azure. As an event streaming platform, it enables seamless collection, transformation, and storage of event data from a wide range of sources such as IoT devices, applications, sensors, and cloud services.

Event Hubs are part of the Azure messaging ecosystem and are built for big data scenarios. The service functions as a highly scalable data streaming platform and event ingestion service capable of receiving and processing millions of events per second. Once events are collected, they can be consumed by real-time analytics services such as Azure Stream Analytics, read by Apache Kafka clients, or stored in data lakes for batch processing.

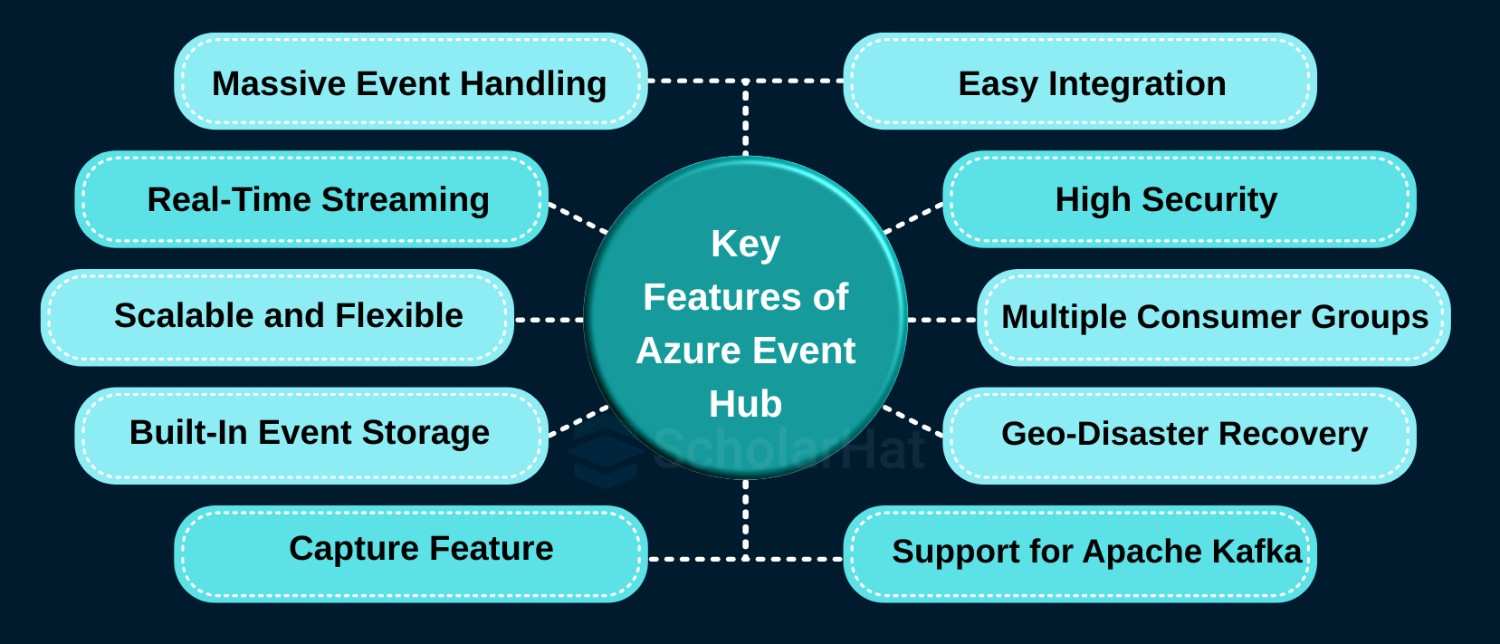

Key Features of Azure Event Hub

Now that you have a good idea of what Azure Event Hub is, let’s talk about its key features. Here we go:

1. Massive Scale for Event Ingestion

One of the coolest things about Azure Event Hub is that it can ingest millions of events per second. Whether you're tracking user clicks on a website, reading data from thousands of IoT devices, or streaming financial transactions, Event Hub can easily handle the heavy load without breaking a sweat.

Why it matters: You don’t have to worry about "what if my app becomes popular overnight?" Event Hub can grow with you, no problem!

2. Real-Time Processing

Data doesn't just sit there in Event Hub; you can process it in real time.

You can connect Event Hub to services like:

- Azure Stream Analytics

- Azure Functions

- Apache Spark

- Your custom apps

Why it matters: You can make decisions as things happen, not after everything’s over.

3. Capture Feature (Automatic Data Storage)

Imagine if every event automatically got saved somewhere safe for you; that's what the Capture feature does. With Capture, Event Hub can automatically store your incoming event data into:

- Azure Blob Storage

- Azure Data Lake Storage

Why it matters: You can later use that data for deep analysis, reporting, or machine learning without any extra setup.

4. Partitioning for Better Performance

Here’s a little technical magic: Event Hub splits incoming data into partitions. Think of partitions as lanes on a highway. Each lane carries its own flow of cars (or events), so traffic moves faster and more smoothly.

Why it matters: Partitioning helps you process huge amounts of data efficiently and in parallel, without bottlenecks.
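To make the highway picture a bit more concrete, here is a minimal sketch of how events could be spread across partitions. It is only an illustration: the hash Event Hub actually uses is internal to the service, so SHA-256 stands in for it here, and the function names `partition_for_key` and `round_robin` are made up for this example.

```python
import hashlib
from itertools import count


def partition_for_key(partition_key: str, partition_count: int) -> int:
    """Map a partition key to a partition index with a stable hash.

    Assumption: SHA-256 is a stand-in; the service's real hash is not public.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def round_robin(partition_count: int):
    """Yield partition indexes in rotation for events without a key."""
    for i in count():
        yield i % partition_count


if __name__ == "__main__":
    # The same key always lands in the same lane, which preserves ordering per key.
    for device in ("sensor-1", "sensor-2", "sensor-1"):
        print(device, "->", partition_for_key(device, 4))
```

The point of the design is that all events sharing a key stay in one partition, so their relative order is kept, while events without a key are simply spread evenly.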

5. Multiple Consumer Groups

This feature is super useful: You can have different applications (or teams) read the same event data independently.

For example:

- One team analyzes user behavior.

- Another monitors system performance.

- Another uses the same data for fraud detection.

Why it matters: You can reuse your event data in multiple ways without duplicating anything.

6. Fully Managed Service

One of my personal favorite things: Azure Event Hub is fully managed by Microsoft.

You don't have to worry about:

- Setting up servers

- Managing clusters

- Scaling manually

- Handling failures

Why it matters: You save huge amounts of time, effort, and operational headaches.

7. Event Replay Ability

Missed some events? No worries. Event Hub keeps events in its built-in storage for the configured retention period, so you can go back and re-read them if something went wrong or if you need to reprocess your data.

Why it matters: It's super helpful for debugging, audits, or building new features without affecting live systems.

8. High Security and Compliance

Since you’re dealing with important data, security is a big deal, right? Event Hub comes with:

- Encryption at rest and in transit

- Private network access via VNETs

- Role-based access control (RBAC)

- Managed Identity support

- Compliance with major certifications (GDPR, ISO, HIPAA, etc.)

Why it matters: You can sleep peacefully knowing your data is safe and you meet industry standards.

9. Kafka-Compatible Interface

If your company already uses Apache Kafka, good news: Azure Event Hub exposes a Kafka-compatible endpoint. Your existing Kafka applications can connect to it with little more than a configuration change, and you get the benefits of Azure’s scalability and management.

Why it matters: It saves huge migration costs and makes integration easier.
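As a rough idea of what that configuration change looks like, the sketch below builds the client settings a Kafka application would typically need to point at an Event Hubs namespace. The property names follow the librdkafka style used by several Kafka clients; the helper name `kafka_client_config` and the sample namespace are hypothetical, and your client library may spell some keys differently.

```python
def kafka_client_config(namespace: str, connection_string: str) -> dict:
    """Build Kafka client settings for an Event Hubs namespace (illustrative)."""
    return {
        # The Kafka endpoint of a namespace listens on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The literal user name "$ConnectionString" tells the service that
        # the password field carries a namespace connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


if __name__ == "__main__":
    # "my-namespace" and the placeholder string are assumptions for the demo.
    config = kafka_client_config("my-namespace", "<connection-string>")
    print(config["bootstrap.servers"])
```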

10. Geo-Disaster Recovery

Nobody wants downtime, especially during disasters. Azure Event Hub offers Geo-Disaster Recovery:

- You can pair your Event Hub with another one in a different region.

- If something goes wrong in one region, you can quickly switch to the secondary region.

Why it matters: Your business stays online even during major regional outages.

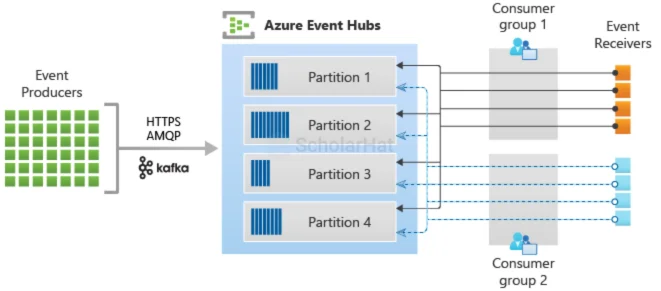

How Azure Event Hub Works

Azure Event Hub operates as a distributed event streaming platform with a sophisticated architecture designed for massive-scale data ingestion. Below is a comprehensive technical breakdown of its core components and data flow mechanisms.

1. Core Architectural Components

A. Namespaces

- A container for Event Hubs that provides DNS integration and isolation boundaries

- Defines the regional deployment and network security boundaries

- Contains shared access policies and encryption keys

B. Event Hubs (Entities)

- Primary event ingestion endpoints within a namespace

- Each can have multiple partitions (up to 32 in the Standard tier, 100 in Premium, and 1,024 in Dedicated)

- Contains:

  - Ingress endpoints (publishing interfaces)

  - Consumer groups (independent consumption views)

  - Retention policies (1 to 7 days in the Standard tier, up to 90 days in Premium and Dedicated; Capture archives events for longer-term storage)

C. Partitions

- Ordered event sequences that enable parallel processing

- Each maintains its own:

  - Offset numbering (position markers)

  - Segment files (physical storage units)

  - Index (for efficient time-based queries)

- Immutable append-only logs guarantee event ordering
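A partition can be pictured as a simple append-only list in which every event gets the next position. The toy class below is a sketch of that idea only; `PartitionLog` is a made-up name, and real offsets in the service are opaque position markers rather than plain list indexes.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Toy append-only log: events are never modified or reordered."""

    events: list = field(default_factory=list)

    def append(self, body: bytes) -> int:
        """Store an event at the end and return its position."""
        self.events.append(body)
        return len(self.events) - 1

    def read_from(self, offset: int, max_count: int = 10) -> list:
        """Return (position, body) pairs starting at the given position."""
        return list(enumerate(self.events))[offset:offset + max_count]


if __name__ == "__main__":
    log = PartitionLog()
    log.append(b"first")
    log.append(b"second")
    print(log.read_from(1))
```

Because writes only ever go to the end, readers that start from the same position always see the same events in the same order.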

2. Data Flow Mechanics

A. Event Ingestion Process

- Producer Authentication

  - SAS tokens or Azure AD (now Microsoft Entra ID) credentials validate publishers

  - Optional IP filtering and VNet service endpoints

- Partition Routing

  - Three routing strategies:

    - Partition Key Hashing (consistent event-to-partition mapping)

    - Round Robin (automatic load balancing)

    - Direct Partition Assignment (explicit control)

- Batching & Compression

  - Events grouped into batches (max 1 MB each)

  - Optional GZIP compression for high-volume scenarios

  - Protocol-level batching in AMQP/Kafka clients

- Durability Commit

  - Events are written to 3 storage replicas synchronously

  - Acknowledgement sent after quorum replication
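The batching step in the ingestion process can be sketched as a simple size-bounded grouping. This is a simplified model, not the SDK's batching logic: it counts only payload bytes and ignores protocol overhead, and `build_batches` is a hypothetical helper name.

```python
MAX_BATCH_BYTES = 1024 * 1024  # 1 MB batch limit mentioned above


def build_batches(events, max_bytes=MAX_BATCH_BYTES):
    """Group byte payloads into batches that stay within max_bytes."""
    batches, current, current_size = [], [], 0
    for event in events:
        size = len(event)
        if size > max_bytes:
            raise ValueError("event is larger than the batch limit")
        # Close the current batch when the next event would overflow it.
        if current and current_size + size > max_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(event)
        current_size += size
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    payloads = [b"x" * 400_000 for _ in range(5)]
    print([len(batch) for batch in build_batches(payloads)])
```

Sending a few large batches instead of many single events cuts the number of round trips, which is usually where most of the publishing cost goes.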

B. Storage Architecture

- Distributed Commit Log design:

  - Segmented into 1 GB blocks per partition

  - Append-only writes for maximum throughput

  - Time-based retention with background cleanup

- Hot/Cold Path Separation:

  - Hot path: In-memory buffers for active partitions

  - Cold path: Azure Storage backend for retention

C. Consumption Patterns

- Pull Model (AMQP/HTTP)

  - Consumers maintain long-lived connections

  - Prefetch buffers minimize latency

  - Credit-based flow control

- Push Model (Event Grid Integration)

  - Serverless triggers via Azure Functions

  - Webhook notifications

- Checkpointing System

  - Consumers persist partition cursors (offsets)

  - Supports:

    - Blob Storage-based checkpoints

    - In-memory tracking for ephemeral workers
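Checkpointing is easier to see in a few lines of code. The sketch below keeps one cursor per consumer group and partition in memory, so a worker that restarts continues after the last event it finished. The class and function names are invented for illustration; a production consumer would normally persist cursors in Blob Storage and checkpoint less often than after every event.

```python
class InMemoryCheckpointStore:
    """Keeps the last processed position per (consumer group, partition)."""

    def __init__(self):
        self._cursors = {}

    def save(self, consumer_group, partition_id, offset):
        self._cursors[(consumer_group, partition_id)] = offset

    def load(self, consumer_group, partition_id):
        # -1 means "nothing processed yet", so reading starts at position 0.
        return self._cursors.get((consumer_group, partition_id), -1)


def process_partition(events, store, consumer_group, partition_id, handler):
    """Handle every event after the stored cursor, checkpointing as we go."""
    start = store.load(consumer_group, partition_id) + 1
    for offset in range(start, len(events)):
        handler(events[offset])
        store.save(consumer_group, partition_id, offset)


if __name__ == "__main__":
    store = InMemoryCheckpointStore()
    partition = ["e0", "e1"]
    process_partition(partition, store, "analytics", "0", print)
    partition.append("e2")
    # Only "e2" is handled on the second run.
    process_partition(partition, store, "analytics", "0", print)
```

Because cursors are keyed by consumer group, a second group reading the same partition starts from the beginning and is not affected by the first group's progress.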

3. Advanced Processing Features

A. Exactly-Once Processing

- Idempotent Producers:

  - Sequence numbers prevent duplicate ingestion

  - Deduplication window (configurable)

- Transactional Outbox:

  - Atomic writes across partitions

  - Two-phase commit support

B. Capture Mechanism

- Time/Size Triggers

  - Flushes data when either:

    - Time threshold reached (default 5 min)

    - Size threshold met (default 300 MB)

- File Naming Convention: {Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}

- Metadata Files: .index files enable efficient time-based queries, and .avro schema files provide type safety
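The two Capture triggers and the naming convention above can be sketched in a few lines. This is an illustrative model rather than how the service is implemented: the thresholds are passed in explicitly, the helper names `should_flush` and `capture_path` are hypothetical, and zero-padded date parts are an assumption about the layout.

```python
from datetime import datetime, timezone


def should_flush(buffered_bytes, seconds_since_flush,
                 size_limit_bytes, time_limit_seconds):
    """Flush when either the size window or the time window is reached."""
    return (buffered_bytes >= size_limit_bytes
            or seconds_since_flush >= time_limit_seconds)


def capture_path(namespace, event_hub, partition_id, when):
    """Build a path following the naming convention listed above."""
    return (f"{namespace}/{event_hub}/{partition_id}/"
            f"{when:%Y/%m/%d/%H/%M/%S}")


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    if should_flush(buffered_bytes=5_000, seconds_since_flush=301,
                    size_limit_bytes=10_000, time_limit_seconds=300):
        print(capture_path("my-namespace", "telemetry", 0, now))
```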

C. Geo-DR Implementation

- Active/Passive Pairing:

  - Aliases abstract the physical namespaces

  - DNS failover triggers (manual or automated)

- Replication Latency:

  - Typically < 15 seconds between regions

  - Asynchronous replication model

4. Performance Optimization

Throughput Units (Standard Tier)

| TU Count | Ingress | Egress | Connections |
|---|---|---|---|
| 1 TU | 1 MB/s | 2 MB/s | 1,000 |
| 20 TU | 20 MB/s | 40 MB/s | 20,000 |
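Using the per-TU figures from the table (1 MB/s ingress and 2 MB/s egress per throughput unit), a quick sizing estimate looks like the sketch below. It is a back-of-the-envelope calculation only: it ignores connection counts and any per-event limits, and `required_throughput_units` is a hypothetical helper.

```python
import math

INGRESS_MB_PER_TU = 1.0  # from the table above
EGRESS_MB_PER_TU = 2.0   # from the table above


def required_throughput_units(ingress_mb_per_s, egress_mb_per_s):
    """Return the smallest TU count that covers both ingress and egress."""
    by_ingress = math.ceil(ingress_mb_per_s / INGRESS_MB_PER_TU)
    by_egress = math.ceil(egress_mb_per_s / EGRESS_MB_PER_TU)
    return max(1, by_ingress, by_egress)


if __name__ == "__main__":
    # 4.5 MB/s in and 6 MB/s out: ingress is the tighter constraint.
    print(required_throughput_units(4.5, 6.0))
```

Whichever direction needs more units decides the total, so a workload with many readers can end up egress-bound even when ingress is small.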

Processing Units (Premium Tier)

- Each PU provides:

  - 10 MB/s ingress

  - 20 MB/s egress

  - 1000 connections

  - 10 consumer groups

Optimal Partitioning

- Throughput Scaling:

  - Each partition supports up to:

    - 1 MB/s ingress

    - 2 MB/s egress

- Consumer Parallelism:

  - Maximum consumers = partitions × consumer groups
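The consumer parallelism rule follows from the fact that, within one consumer group, each partition is read by one active worker at a time. The sketch below spreads partitions over workers to show where the ceiling comes from; the function names are made up, and real SDKs balance ownership dynamically rather than with a fixed modulo.

```python
def assign_partitions(partition_count, worker_count):
    """Spread partitions over the workers of one consumer group."""
    assignment = {worker: [] for worker in range(worker_count)}
    for partition in range(partition_count):
        assignment[partition % worker_count].append(partition)
    return assignment


def max_active_consumers(partition_count, consumer_group_count):
    """Upper bound on simultaneously active readers across all groups."""
    return partition_count * consumer_group_count


if __name__ == "__main__":
    # With 2 partitions and 3 workers, the third worker has nothing to read.
    print(assign_partitions(2, 3))
    print(max_active_consumers(partition_count=4, consumer_group_count=3))
```

Adding workers beyond the partition count does not add throughput, which is why the partition count is worth choosing with future load in mind.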

5. Security Implementation

Encryption Layers

| Layer | Technology |
|---|---|
| Transit | TLS 1.2+ (AMQP/HTTPS) |
| At-Rest | Azure Storage Service Encryption |
| Client-Side | Customer-managed keys (CMK) |

Access Control Matrix

| Method | Granularity | Use Case |
|---|---|---|
| SAS Tokens | Per-entity, read/write | Device-level auth |
| Azure RBAC | Management plane control | Admin roles |
| Network ACLs | IP/VNet restrictions | Perimeter security |
| Private Link | Private endpoint connections | Hybrid cloud scenarios |
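For the SAS token row, it helps to see how a token is put together: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time, signed with the policy key. The sketch below follows that commonly documented shape using only the standard library; the function name, the sample URI, and the policy name are placeholders, so check the result against your own namespace before relying on it.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import quote_plus


def generate_sas_token(resource_uri, key_name, key, ttl_seconds=3600, now=None):
    """Build a Shared Access Signature token for the given resource URI."""
    issued_at = time.time() if now is None else now
    expiry = int(issued_at + ttl_seconds)
    encoded_uri = quote_plus(resource_uri)
    # The signed string is the encoded URI and the expiry, separated by a newline.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}"
            f"&sig={signature}&se={expiry}&skn={key_name}")


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(generate_sas_token(
        "https://my-namespace.servicebus.windows.net/telemetry",
        key_name="send-policy",
        key="not-a-real-key",
    ))
```

Short lifetimes keep a leaked token from being useful for long, which is one reason SAS tokens suit device-level authentication.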

6. Monitoring & Diagnostics

Critical Metrics

- Throttled Requests: Indicates TU/PU limits reached

- Incoming Messages: Per-partition throughput

- Active Connections: Publisher/consumer count

- Capture Backlog: Delay in archival processing

7. Failure Handling Patterns

Producer Resilience

- Automatic Retries: Exponential backoff (default 3 attempts)

- Circuit Breakers: SDK-level fault detection

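- Dead Lettering: Not built into Event Hub; the client must redirect failed events itself (for example, to a separate event hub or a storage account)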
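Retrying with exponential backoff is simple enough to sketch directly. The helper below is not the SDK's retry policy: `send_with_retry`, the base delay, and the choice to treat "3 attempts" as three tries in total are all assumptions made for this example.

```python
import random
import time


def send_with_retry(send, event, max_attempts=3, base_delay=0.8, sleep=time.sleep):
    """Call send(event), retrying transient failures with growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(event)
        except ConnectionError:
            if attempt == max_attempts:
                raise  # Out of attempts: let the caller decide what to do.
            delay = base_delay * 2 ** (attempt - 1)
            # A little random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay / 10))


if __name__ == "__main__":
    attempts = []

    def flaky_send(event):
        attempts.append(event)
        if len(attempts) < 3:
            raise ConnectionError("transient failure")
        return "accepted"

    # sleep is replaced with a no-op so the demo finishes immediately.
    print(send_with_retry(flaky_send, {"id": 1}, sleep=lambda seconds: None))
```

When the final attempt also fails, the exception reaches the caller, which is the natural place to park the event somewhere safe for later inspection.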

Consumer Recovery

- Checkpoint Rollback: Rewind to last known good offset

- Replay Capability: Time-based event replay

- Consumer Group Isolation: Failures don't affect other groups
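Time-based replay boils down to finding the first retained event at or after a chosen timestamp and reading forward from there. The sketch below models that with a sorted list and a binary search; `replay_from_time` is a hypothetical helper, and plain numbers stand in for enqueue timestamps.

```python
from bisect import bisect_left


def replay_from_time(events, start_time):
    """Return events enqueued at or after start_time.

    events is a list of (enqueued_time, body) pairs sorted by time,
    which mirrors the ordered, append-only nature of a partition.
    """
    times = [enqueued for enqueued, _ in events]
    return events[bisect_left(times, start_time):]


if __name__ == "__main__":
    retained = [(100, "login"), (160, "purchase"), (220, "logout")]
    # Reprocess everything from time 150 onwards.
    print(replay_from_time(retained, 150))
```

Replaying this way never changes the stored events, so other consumer groups keep reading undisturbed while one group reprocesses history.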

Architecture Delivers:

- 10 M+ events/sec throughput

- Sub-second end-to-end latency

- Zero data loss durability

- Enterprise-grade security compliance

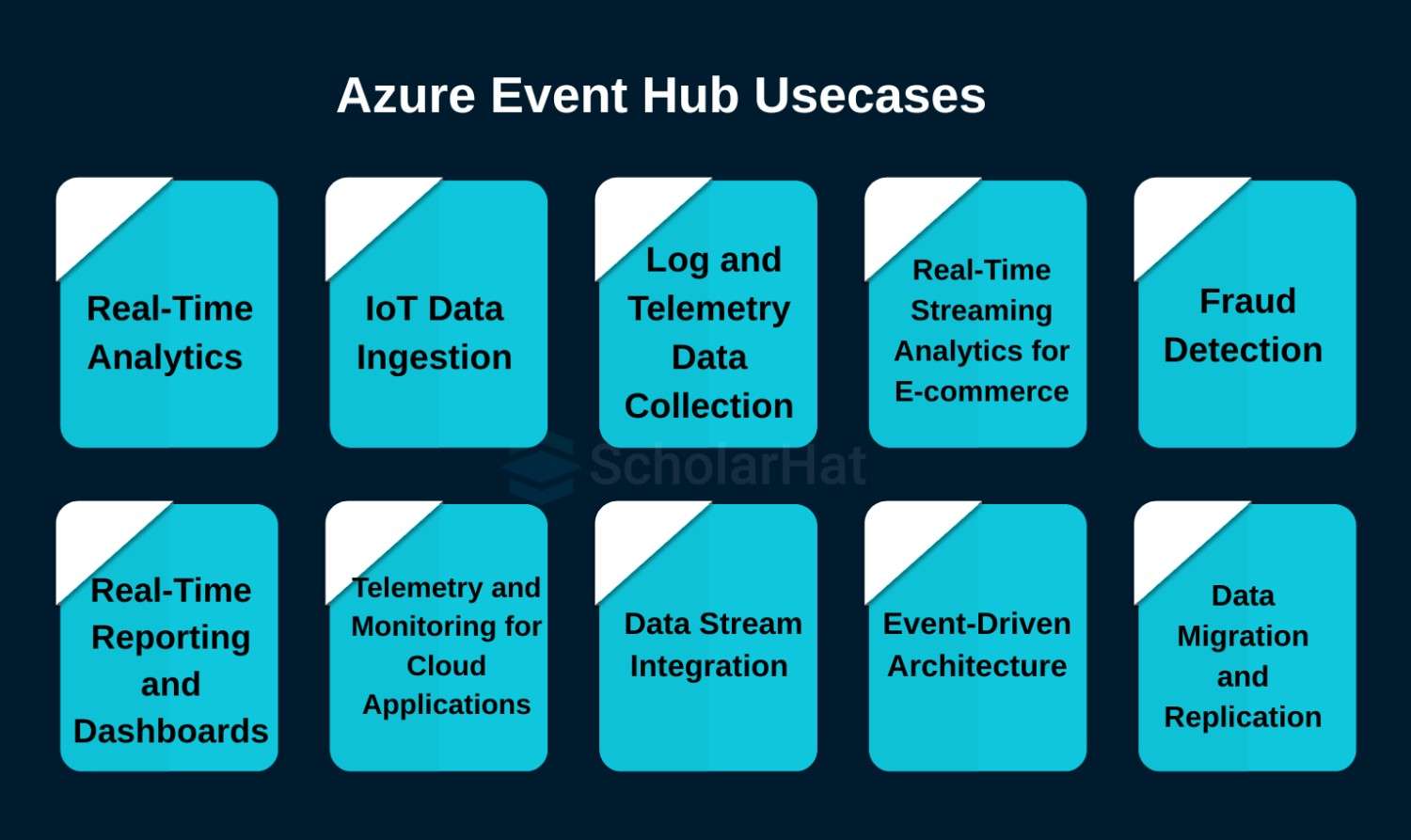

What is Azure Event Hub Used For?

Azure Event Hub is used for collecting and processing large volumes of streaming data in real-time. Common uses include:

- Telemetry ingestion from IoT devices

- Application event logging

- Fraud detection in financial systems

- Real-time analytics and dashboards

- Clickstream analysis for web analytics

Use Cases of Azure Event Hub

1. Real-Time Analytics

- Description: Azure Event Hub can collect real-time data from a wide range of sources, such as IoT devices, applications, or logs. Once the data is ingested, you can process it using Azure Stream Analytics or other data processing tools to generate real-time insights.

- Example: Monitoring social media feeds, weather data, or sensor data for immediate analysis and decision-making.

2. IoT Data Ingestion

- Description: Azure Event Hub is ideal for collecting data from IoT devices. The platform can handle millions of events per second, which makes it a good fit for IoT workloads that require high throughput and low latency.

- Example: Smart cities, industrial automation, and connected vehicles, where devices continuously stream telemetry data to be processed for further analysis.

3. Log and Telemetry Data Collection

- Description: Azure Event Hub can aggregate logs, telemetry, and application events from multiple sources like web servers, apps, and infrastructure. Once ingested, the data can be sent to other Azure services like Azure Monitor or Azure Log Analytics for analysis.

- Example: Collecting server logs, application logs, or custom telemetry data from your infrastructure to perform diagnostics, monitor health, and improve performance.

4. Real-Time Streaming Analytics for E-commerce

- Description: E-commerce platforms can use Azure Event Hub to stream customer interaction data, purchase histories, and real-time product inventory updates. This data can then be analyzed in real-time to enhance the customer experience or to trigger personalized marketing campaigns.

- Example: Personalized recommendations based on real-time shopping behavior or inventory management in a retail application.

5. Fraud Detection

- Description: By ingesting financial transactions or user behavior data in real-time, Event Hub can help organizations detect fraud as it happens. The data can be analyzed for unusual patterns or anomalies, triggering alerts for immediate investigation.

- Example: Analyzing credit card transactions in real-time to detect fraudulent activity based on user behavior patterns.

6. Real-Time Reporting and Dashboards

- Description: Data flowing through Event Hub can be processed by Azure Stream Analytics or other analytics tools to power real-time dashboards and reporting systems for business decision-making.

- Example: Sales dashboards that update in real-time as transactions occur or inventory dashboards showing live product stock levels.

7. Telemetry and Monitoring for Cloud Applications

- Description: For cloud-native applications, Event Hub can handle telemetry and monitoring data, providing real-time visibility into the health and performance of the application. It can aggregate data from various microservices and applications and send it to monitoring tools.

- Example: Monitoring the performance of cloud-based applications, logging errors, and tracking user activities.

8. Data Stream Integration

- Description: Azure Event Hub serves as a central hub for streaming data that can be integrated with other Azure services like Azure Functions, Azure Databricks, or Azure Synapse Analytics. This allows organizations to build end-to-end data pipelines for processing and analyzing large datasets.

- Example: Using Azure Functions to trigger specific actions based on the data received from Event Hub, such as sending an email notification when a threshold is crossed.

9. Event-Driven Architecture

- Description: Event Hub is perfect for implementing event-driven architectures, where different components of a system communicate via events. By using Event Hub, systems can remain loosely coupled and responsive to real-time changes in the environment.

- Example: A payment processing system where each transaction triggers various downstream processes like order fulfillment, customer notifications, and inventory updates.

10. Data Migration and Replication

- Description: Event Hub can be used to replicate data from on-premises systems to cloud systems or migrate large volumes of data between cloud environments.

- Example: Migrating data from legacy systems to Azure cloud-based solutions without interrupting ongoing operations.

11. Game Telemetry and Player Behavior Analytics

- Description: Game developers can use Azure Event Hub to stream telemetry data from online games to monitor player behavior, analyze game performance, and provide real-time insights.

- Example: Collecting player actions in real-time to adjust game dynamics or for detecting cheating behaviors.

Benefits of Using Azure Event Hub

- Real-time Processing: Enables businesses to act on data immediately.

- Scalability: Designed to handle high volumes of data with automatic scaling.

- Flexibility: Integrates easily with various Azure services and external tools.

- Reliability: Provides an at-least-once delivery model, so consumers should be prepared to handle occasional duplicate events.

- Cost-Effective: Pay-as-you-go pricing based on throughput units and retention period.

Event Hubs vs. Event Grid vs. Service Bus

| Feature | Azure Event Hubs | Azure Event Grid | Azure Service Bus |
|---|---|---|---|
| Primary Use | Event streaming and telemetry ingestion | Event routing and notification | Reliable enterprise messaging |
| Message Volume | High (millions of events/sec) | Medium to low | Medium to high |
| Message Size | Up to 1 MB | Up to 1 MB | Up to 256 KB (Standard), 1 MB (Premium) |
| Message Retention | Up to 7 days (Standard), up to 90 days (Premium/Dedicated) | 24 hours | Configurable (up to days/months) |
| Message Ordering | Guaranteed within a partition | No guaranteed ordering | Guaranteed with sessions |
| Processing Pattern | Pull-based streaming | Push-based event notification | Queue or topic/subscription (pull-based) |
| Consumers | Multiple parallel consumers | One-to-many subscribers | One-to-one (queues) or many (topics) |
| Real-time Analytics Support | Yes (Stream Analytics, Spark, etc.) | No (used for reactive apps) | Not suitable for real-time analytics |
| Integration with Kafka | Kafka-compatible endpoint available | No Kafka support | No Kafka support |
| Ideal for IoT | Yes | Partially (alerts/notifications) | No |
| Built-in Dead-lettering | No | No | Yes |
| Retry Policies | Client-managed | Built-in automatic retries | Built-in retry and delivery control |
| Delivery Guarantees | At least once (best effort) | At least once | At least once, exactly-once (Premium) |
| Use Case Example | Streaming sensor data to the analytics engine | Triggering workflows after blob upload | Processing financial transactions with reliability |
| Best Fit For | Big data pipelines, telemetry, and real-time logs | Serverless event-driven apps | Order processing, enterprise workflows |

Conclusion

Azure Event Hub is a powerful and scalable solution for real-time data ingestion and streaming. Whether you're managing IoT telemetry, application logs, or financial transactions, Event Hub provides the infrastructure needed to process data efficiently and reliably. Its seamless integration with Azure services and open-source tools makes it a cornerstone for building modern, data-driven applications.


FAQs

Protocols supported by Azure Event Hub:

- HTTPS

- AMQP (Advanced Message Queuing Protocol)

- Kafka protocol (Event Hubs for Apache Kafka)

Azure Event Hub pricing depends on:

- Throughput Units (Basic/Standard), Processing Units (Premium), or Capacity Units (Dedicated)

- Data capture costs (if you enable Event Hub Capture)

- Ingress/Egress data volume

- Additional features like Geo-Disaster Recovery

Basic and Standard tiers are charged based on TU usage, while Premium and Dedicated tiers offer capacity-based pricing.

Resources for learning Azure Event Hub:

- Microsoft Learn's official Event Hub modules

- ScholarHat's Azure Tutorial
