13 Feb

Top 50+ Docker Interview Questions (Freshers + Experienced)

Docker Interview Questions and Answers 2024

Hey! If you are preparing for a Docker interview, working through Docker interview questions and answers is a good way to practice and master Docker. And if you're applying for jobs that involve managing and deploying applications, chances are you'll be expected to know Docker.

Roles such as Software Developer, DevOps Engineer, Python Developer, Data Engineer, and many more use Docker regularly, and major cloud providers such as Google and Amazon support Docker containers in their services. Docker is an open-source containerization platform used for building, deploying, and running applications. With its increasing adoption in the industry, software developers need a strong understanding of its concepts and practices.

In this Docker tutorial, we will cover many topics, from the basics of Docker containers to more advanced concepts such as container orchestration, Docker Compose, and Docker networking.


After going through these Docker interview questions and answers, you'll feel confident facing the interviewers and cracking the interview.

Basic Docker Interview Questions for Freshers

1. What is Docker?

Docker is a well-known containerization platform that packages your application and all its dependencies together into containers. This helps ensure that your application behaves the same way whether the environment is development, test, or production. A Docker container wraps a piece of software in a complete filesystem that includes every component it needs to run.


2. Why is DevOps inevitable?

- Increased Pace of Software Delivery: DevOps practices enable teams to automate and streamline the software delivery process, reducing manual overhead and accelerating time-to-market for new features and updates.

- Enhanced Collaboration: DevOps fosters collaboration and communication between development, operations, and other cross-functional teams involved in the software delivery lifecycle.

- Improved Quality and Stability: DevOps practices such as continuous integration (CI) and continuous delivery (CD) enable teams to automate testing, deployment, and monitoring processes, resulting in higher-quality software with fewer defects and faster recovery from failures.

- Scalability and Flexibility: DevOps enables organizations to scale their software delivery pipelines to meet changing business demands and customer needs.

- Alignment with Business Objectives: DevOps practices focus on delivering value to customers and stakeholders by aligning development and operations activities with business objectives.

- Competitive Advantage: Organizations that embrace DevOps principles and practices gain a competitive advantage by being able to innovate faster, respond to market trends more effectively, and deliver superior customer experiences.

3. What are the key features of Docker?

- Application agility

- Developer productivity

- Easy modeling

- Operational efficiencies

- Placement and affinity

- Version control

4. What is Docker Container?

- A container is a standard unit of software that packages up code along with all of its dependencies.

- It lets the application run quickly and reliably when it is moved from one computing environment to another.

- A Docker container runs from a Docker image: a lightweight, standalone, executable package of software that includes everything needed to run an application.

- This includes the code, runtime, system tools, libraries, and settings.

5. How to create a Docker container?

It involves the following steps:

- Define a Dockerfile: The Dockerfile is a text file that contains instructions for building the Docker image. It specifies the base image, adds any necessary dependencies, and configures the environment for the application.

Example of Dockerfile

    # Use the official Node.js image as the base image
    FROM node:14

    # Set the working directory in the container
    WORKDIR /app

    # Copy package.json and package-lock.json to the working directory
    COPY package*.json ./

    # Install dependencies
    RUN npm install

    # Copy the rest of the application code to the working directory
    COPY . .

    # Expose port 3000
    EXPOSE 3000

    # Command to run the application
    CMD ["node", "index.js"]

- Build the Docker image: Use the docker build command to build the Docker image. Navigate to the directory containing your Dockerfile and run the following command:

docker build -t my-node-app .

This command builds the Docker image using the instructions in the Dockerfile and tags it with the name my-node-app. The trailing . tells Docker to use the current directory as the build context.

- Run the Docker container: Use the docker run command to run a container based on the image.

docker run -d -p 3000:3000 my-node-app

This command runs the container in detached mode (-d), meaning it runs in the background. The -p option maps port 3000 on the host to port 3000 in the container. The last argument specifies the name of the Docker image to use (my-node-app).
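To make the -p mapping concrete, here is a minimal sketch of how a value such as 3000:3000 breaks down into its parts. The function parse_port_mapping is hypothetical and not part of Docker; it handles only the common forms [IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL] and CONTAINER_PORT, and deliberately leaves out port ranges and IPv6 addresses.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]    # None means "all host interfaces"
    host_port: Optional[int]  # None means "Docker picks a free host port"
    container_port: int
    protocol: str


def parse_port_mapping(spec: str) -> PortMapping:
    """Parse a simplified `docker run -p` value (hypothetical helper)."""
    # Split off an optional "/tcp" or "/udp" suffix; TCP is the default.
    ports, _, protocol = spec.partition("/")
    protocol = protocol or "tcp"

    parts = ports.split(":")
    if len(parts) == 1:    # "3000": publish container port 3000 on a random host port
        host_ip, host_port, container = None, None, parts[0]
    elif len(parts) == 2:  # "8080:3000": host port 8080 -> container port 3000
        host_ip, host_port, container = None, parts[0], parts[1]
    elif len(parts) == 3:  # "127.0.0.1:8080:3000" or "127.0.0.1::3000"
        host_ip, host_port, container = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")

    return PortMapping(
        host_ip=host_ip or None,
        host_port=int(host_port) if host_port else None,
        container_port=int(container),
        protocol=protocol,
    )


print(parse_port_mapping("3000:3000"))
# PortMapping(host_ip=None, host_port=3000, container_port=3000, protocol='tcp')
```

Keeping the host side optional mirrors how Docker treats a bare container port: the container port is published, and a free host port is chosen for you.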

6. How is Docker unique from other container technologies?

Ease of Use

- Docker introduced a user-friendly interface and tools that simplified creating, managing, and deploying containers.

- Docker's CLI and GUI (Docker Desktop) make it easy for developers and operators to work with containers without in-depth knowledge of container internals.

Standardization

- Docker played a significant role in standardizing container technology and popularizing the use of container images and registries.

Portability

- Docker containers are highly portable and run consistently across different host machines and cloud environments.

- Docker is supported everywhere from local development environments to production clusters.

Ecosystem

- Docker has built a rich ecosystem of tools, libraries, and services that complement its core container platform.

Community Support

- Docker has a large and active community of developers, contributors, and users who contribute to the ongoing development and improvement of the Docker platform.

Integration with Orchestration Tools

- Docker containers can be integrated seamlessly with popular container orchestration tools such as Kubernetes.

- This enables organizations to deploy, manage, and scale containerized applications with ease.


7. What is Docker Hub?

- Docker containers are created from Docker images.

- Those images need a registry from which they can be shared and pulled, and Docker Hub is Docker's default public registry.

- Docker Hub lets users find and pull ready-made images.

- It is also useful for publishing your own customized images.

- Much as GitHub provides a distributed file store with version control for code, Docker Hub lets you push and pull images, keep images in private repositories, and automatically build Docker images from GitHub or Bitbucket repositories before pushing them to Docker Hub.

8. What is a Docker image?

- A Docker image is the read-only template from which Docker containers are created.

- Docker images are primarily used to create containers.

- Images are created with the docker build command, and they produce a container when launched with docker run.

- Docker images are stored in, and shared through, a Docker registry.

- An image is made up of a stack of layers, usually built on top of another (base) image.

- Because of this layering, only the layers that are missing at the destination need to be transferred, which minimizes the amount of data sent over the network.

9. Describe the components of Docker Architecture.

The three key components in Docker architecture are the host, client, and registry.

- Host: Runs the Docker daemon and holds containers and images. The daemon pulls images from (and pushes them to) the registry, and images serve as the read-only templates for the applications that run in containers.

- Client: Sends commands, such as docker run or docker build, to the Docker daemon on the host.

- Registry: Stores Docker images. Docker Hub is a public registry that anyone can use.

10. What is the Docker Registry?

- A Docker registry is a storage and distribution system for Docker images.

- Docker Hub is a public registry and the default one Docker uses for pulling and pushing images.

- Docker Hub is the largest public registry of container images.

- Its images are published and maintained by a large number of organizations and individual developers.

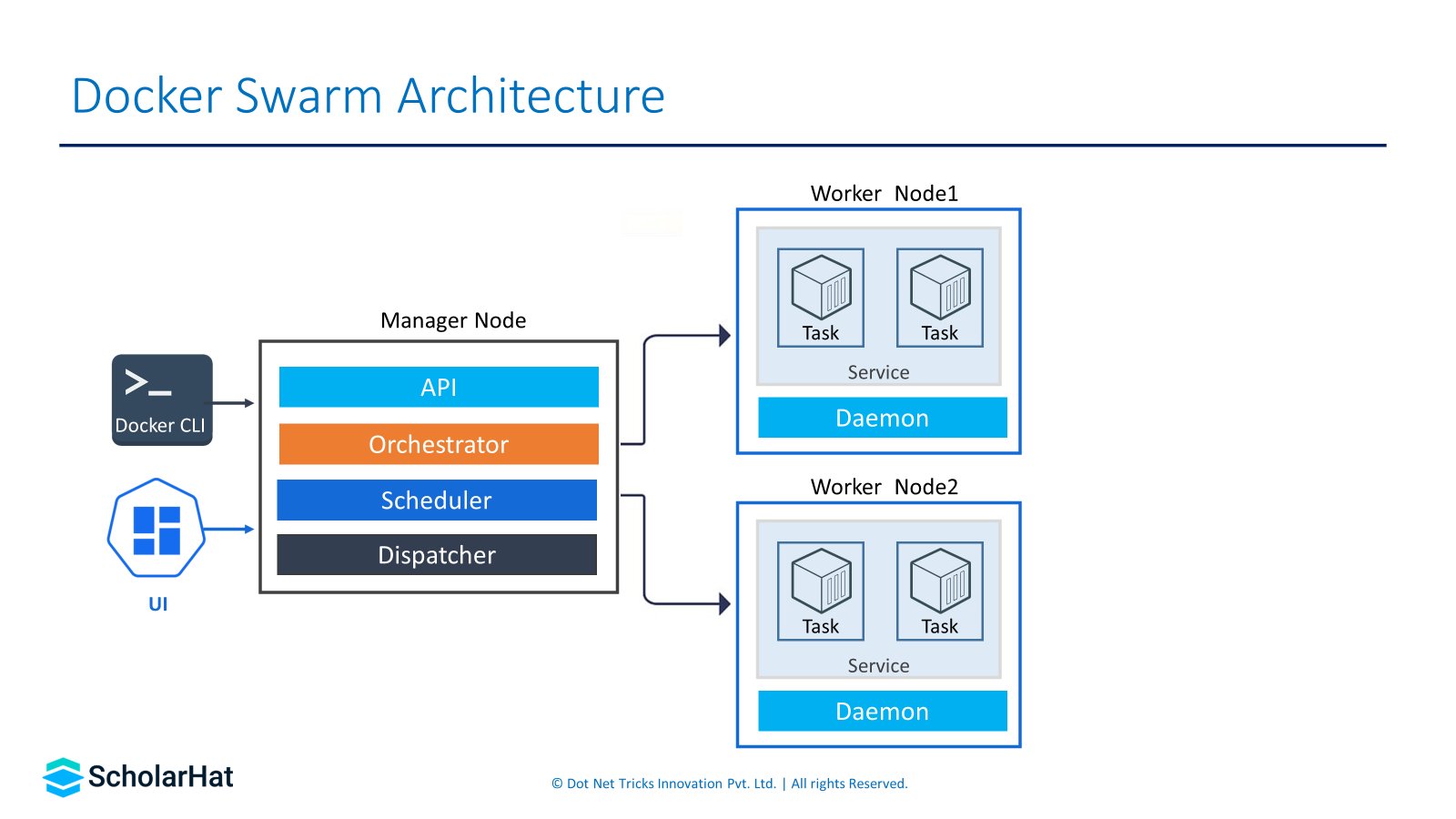
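The following sketch shows how a short image name such as nginx resolves to a full reference on Docker Hub, the default registry. normalize_image_ref is a hypothetical helper with simplified rules: the first path component is treated as a registry host only if it contains a . or :, or is localhost, and digest references (@sha256:...) are not handled.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to REGISTRY/REPOSITORY:TAG (simplified rules)."""
    first, sep, rest = ref.partition("/")
    # A first component that looks like a host name (contains "." or ":",
    # or is "localhost") is treated as the registry address.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in remainder:
        remainder = "library/" + remainder

    # A tag can only appear in the last path component; it defaults to "latest".
    if ":" in remainder.rsplit("/", 1)[-1]:
        name, tag = remainder.rsplit(":", 1)
    else:
        name, tag = remainder, "latest"
    return f"{registry}/{name}:{tag}"


print(normalize_image_ref("nginx"))                  # docker.io/library/nginx:latest
print(normalize_image_ref("localhost:5000/my-app"))  # localhost:5000/my-app:latest
```

This is why docker pull nginx and docker pull docker.io/library/nginx:latest fetch the same image, while a reference that starts with a host name is sent to that registry instead.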

11. What is Docker Swarm?

- Docker Swarm is native clustering for Docker: it turns a group of Docker hosts into a single, virtual Docker host.

- Tools that already communicate with a Docker daemon can use Docker Swarm to scale transparently to multiple hosts.

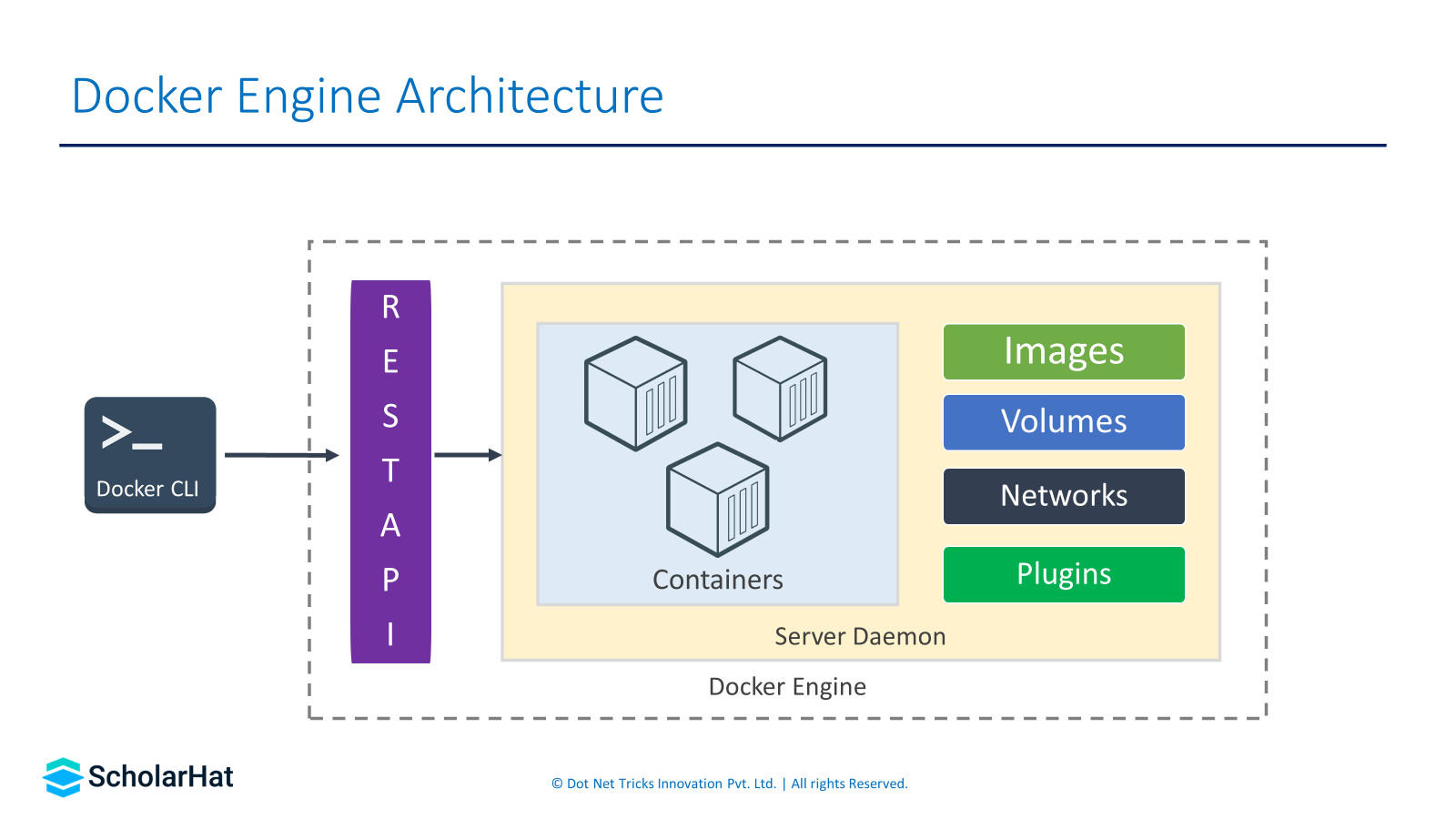

12. What is Docker Engine?

Docker Engine is an open-source containerization technology used to build and containerize applications. It consists of the following three components:

- Docker Engine REST API

- Docker Command-Line Interface (CLI)

- Docker Daemon

13. What is a Docker Namespace?

Namespaces provide a layer of isolation for Docker containers. When you start a container, Docker creates a set of namespaces for it, so the container gets its own view of resources such as process IDs, network interfaces, and mount points. This keeps processes inside the container from seeing or affecting the rest of the host system. Some of the namespace types Docker supports are PID, IPC, Mount, User, Network, and UTS.
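On Linux you can see these namespaces directly: every process has symbolic links under /proc/PID/ns, one per namespace type, and two processes share a namespace when the links point to the same inode. This Linux-only sketch reads them; list_namespaces and shared_namespaces are hypothetical helpers, not Docker APIs.

```python
import os


def list_namespaces(pid: str = "self") -> dict:
    """Map each namespace type of a process to its identifier (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink such as "net:[4026531840]"; the number is an inode.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some entries can be unreadable, e.g. without sufficient permissions
    return namespaces


def shared_namespaces(pid_a: str, pid_b: str) -> list:
    """Namespace types in which two processes are NOT isolated from each other."""
    a, b = list_namespaces(pid_a), list_namespaces(pid_b)
    return [name for name in a if b.get(name) == a[name]]


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:<20} {ident}")
```

If you pass the host PID of a container's main process (for example, from docker inspect --format '{{.State.Pid}}' CONTAINER) together with the PID of an ordinary host process, the namespace types missing from the result are the ones Docker created for the container. Reading another process's namespaces usually requires root.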

14. What is Docker Compose?

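Docker Compose is a tool for defining and running multi-container Docker applications. You describe the application's services, networks, and volumes in a YAML file (commonly named docker-compose.yml or compose.yaml) and then create and start everything with a single command.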

15. What type of applications are more suitable for Docker Container: Stateless or Stateful?

- Stateless applications are better suited to Docker containers than stateful applications.

- With a stateless application, you can build a single container image and move the app's configurable state parameters out of it.

- After this is done, you can run the same container image with different production parameters in different environments.
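A common way to keep a containerized application stateless is to read its configuration from environment variables, so the same image can be started with different docker run -e values in each environment. In this minimal sketch the variable names APP_PORT, APP_ENV, and DATABASE_URL are hypothetical; nothing that must survive a restart is kept inside the container.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class AppConfig:
    port: int
    environment: str
    database_url: Optional[str]


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build the app's configuration from environment variables (hypothetical names)."""
    return AppConfig(
        port=int(env.get("APP_PORT", "3000")),
        environment=env.get("APP_ENV", "development"),
        # Persistent state lives in an external database, not in the container.
        database_url=env.get("DATABASE_URL"),
    )
```

The same image could then run with defaults locally and with, for example, docker run -e APP_ENV=production -e APP_PORT=8080 my-app in production, where my-app is a placeholder image name.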

16. Which networks are available as default in Docker?

The default networks available in Docker are bridge, none, and host.

- Bridge: The default network that containers connect to if no other network is specified.

- None: Gives the container its own network stack with no external network interface (only a loopback interface).

- Host: Connects the container directly to the host's network stack.

17. Which command should be used to check all running containers in Docker?

You can list all running containers with the following command:

docker ps

It displays a summary of the running containers, including their container ID, image name, command, creation time, status, ports, and names. To include stopped containers as well, use docker ps -a.
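For scripting, docker ps can print one JSON object per container with docker ps --format '{{json .}}'. This sketch parses that output in Python; parse_ps_output and running_containers are hypothetical helpers, and running_containers assumes the docker CLI is installed and on the PATH.

```python
import json
import subprocess


def parse_ps_output(text: str) -> list:
    """Parse `docker ps --format '{{json .}}'` output: one JSON object per line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def running_containers() -> list:
    """Run docker ps and return (ID, Names, Status) for each running container."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return [(c["ID"], c["Names"], c["Status"]) for c in parse_ps_output(result.stdout)]
```

Parsing the JSON format is more robust than splitting the default table output on whitespace, because fields such as Status ("Up 2 minutes") contain spaces.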

18. Name the command to stop the docker container.

To stop a Docker container, use the docker stop command followed by the container ID or container name.

docker stop [OPTIONS] CONTAINER [CONTAINER...]

Replace [OPTIONS] with any additional options you may want to use (optional) and replace CONTAINER with the ID or name of the container you want to stop.

Example

docker stop abcd1234
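docker stop first sends SIGTERM to the container's main process and, if it has not exited after a grace period (10 seconds by default, adjustable with -t), sends SIGKILL. An application that handles SIGTERM can therefore shut down cleanly. This matters because the main process runs as PID 1 inside the container, and the kernel does not apply default signal actions to PID 1, so without a handler SIGTERM is effectively ignored. A minimal Python sketch, where the sleep loop is a placeholder for real work:

```python
import signal
import threading
import time

# Set when Docker asks the process to stop.
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    # Only record the request here; the main loop does the actual cleanup.
    shutdown_requested.set()


def main() -> None:
    signal.signal(signal.SIGTERM, handle_sigterm)
    while not shutdown_requested.is_set():
        time.sleep(0.5)  # placeholder for real work
    print("SIGTERM received, cleaning up before exit")


if __name__ == "__main__":
    main()
```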

19. Name the command to run the image as a container.

To run a Docker image as a container, use the docker run command.

docker run [OPTIONS] IMAGE [COMMAND] [ARG...]

Replace [OPTIONS] with any additional options you may want to use (optional), IMAGE with the name of the Docker image you want to run, [COMMAND] with the command to execute within the container (optional), and [ARG...] with any arguments to pass to the command (optional).

Example

docker run nginx

20. What are Docker object labels?

- Docker object labels are key-value pairs stored as strings.

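- Labels let you attach metadata to images, containers, volumes, networks, local daemons, swarm nodes, and services.

- An object can have multiple labels, but each key should be unique on that object. Labels on images, containers, volumes, networks, and local daemons remain static for the object's entire lifetime; to change them, you must recreate the object. Labels on swarm nodes and services can be updated.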
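The Docker documentation recommends reverse-DNS label keys (for example, com.example.version) and reserves the com.docker.*, io.docker.*, and org.dockerproject.* prefixes for Docker's own use. This sketch checks a key against those guidelines as summarized here: lowercase letters, digits, periods, and hyphens only, starting and ending with a letter, and no consecutive separators. is_valid_label_key is a hypothetical helper, and Docker itself does not enforce these conventions.

```python
import re

RESERVED_PREFIXES = ("com.docker.", "io.docker.", "org.dockerproject.")

# Starts with a lowercase letter; a "." or "-" must always be followed by an alphanumeric.
_KEY_PATTERN = re.compile(r"[a-z](?:[a-z0-9]|[.-](?=[a-z0-9]))*")


def is_valid_label_key(key: str) -> bool:
    """Check a label key against Docker's recommended (not enforced) conventions."""
    if not _KEY_PATTERN.fullmatch(key) or not key[-1].isalpha():
        return False
    return not key.startswith(RESERVED_PREFIXES)
```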

Intermediate Docker Interview Questions

21. What are the common instructions in Dockerfile?

The common instructions in a Dockerfile are:

- FROM: Sets the base image. All the instructions that follow are executed on top of this base image. It takes an image name and an optional tag.

Syntax

FROM IMAGE[:TAG]

- RUN: Executes the specified commands during the build, creating a new image layer.

Example

RUN apt-get update && apt-get install -y vim

- CMD: Sets the default command to execute when a container starts. A Dockerfile should contain only one CMD; if there is more than one, only the last CMD takes effect.

Example

CMD ping scholarhat.com

- ENTRYPOINT: Configures a container to run as an executable. It specifies a command that will execute when the Docker container starts.

Example

ENTRYPOINT ping google.com

- LABEL: Adds a description or other metadata to a Docker image as a key-value pair.

Example

LABEL description="Welcome to ScholarHat"

- WORKDIR: Sets the working directory inside the container for the instructions that follow.

Example

WORKDIR /usr/src/app

- COPY: Copies files or directories from the build context on your local machine into the image.

Syntax

COPY SOURCE... DESTINATION

Example

COPY index.html /var/www/html
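To illustrate the rule that only the last CMD takes effect, here is a deliberately simplified Dockerfile reader: it joins lines that end in a backslash, skips comments and blank lines, and reports the CMD that would be used. parse_dockerfile and effective_cmd are hypothetical helpers; the sketch ignores parser directives, heredocs, and custom escape characters.

```python
def _split(line: str) -> tuple:
    """Split "INSTRUCTION arguments" into (INSTRUCTION, arguments)."""
    parts = line.split(None, 1)
    return parts[0].upper(), (parts[1].strip() if len(parts) > 1 else "")


def parse_dockerfile(text: str) -> list:
    """Return (INSTRUCTION, arguments) pairs from a simple Dockerfile."""
    instructions, buffer = [], ""
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.endswith("\\"):
            buffer += line[:-1].rstrip() + " "  # instruction continues on the next line
            continue
        instructions.append(_split(buffer + line))
        buffer = ""
    if buffer.strip():  # file ended in the middle of a continuation
        instructions.append(_split(buffer.strip()))
    return instructions


def effective_cmd(text: str):
    """Only the last CMD instruction counts; earlier ones are overridden."""
    cmds = [args for keyword, args in parse_dockerfile(text) if keyword == "CMD"]
    return cmds[-1] if cmds else None
```

Instructions are case-insensitive, which is why the keyword is upper-cased; writing them in uppercase is only a convention.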

22. What is Hypervisor?

A hypervisor creates and manages the virtual environments in which guest virtual machines run. It also monitors the guest systems and ensures that each one is allocated the resources it needs. Because the available computing resources are shared among the VMs, fewer physical machines are needed, which reduces hardware and maintenance requirements.

The two types of hypervisors are:

- Type I (bare-metal): Runs directly on the host's hardware, acting as a lightweight operating system.

- Type II (hosted): Runs like any other piece of software within an existing operating system.

23. What is the difference between Docker Layer and Image?

| Aspect | Docker Layer | Docker Image |
| --- | --- | --- |
| Composition | When you pull or build an image, Docker retrieves or creates each layer and stacks them to form the complete image. | An image is composed of one or more layers. Each layer is a filesystem diff that adds, modifies, or removes files relative to the layer below it. |
| Mutability | Each layer is read-only once it has been created; a running container adds its own thin writable layer on top of the image's layers. | An image is immutable: it cannot be changed once it is created. Changes produce a new image. |
| Sharing & Distribution | Layers are shared and reused across different images, which saves storage and bandwidth when pulling and pushing images to registries. | Images can be shared and distributed via container registries such as Docker Hub, from which other users and systems can pull them. |
| Versioning | A new version of an image adds or replaces some layers while reusing unchanged ones, which lets you track changes to the image over time. | Images can be versioned using tags, allowing you to keep multiple versions of the same image with different configurations or dependencies. |
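Layer sharing works because layers are content-addressed: each one is identified by a SHA-256 digest of its contents, so identical layers get identical IDs and are stored only once. The toy model below shows two images sharing a base layer. LayerStore is hypothetical, and short byte strings stand in for real filesystem archives; Docker's actual storage also distinguishes compressed and uncompressed digests, which this sketch ignores.

```python
import hashlib


class LayerStore:
    """Toy content-addressable store: one copy per unique layer digest."""

    def __init__(self) -> None:
        self.blobs = {}   # digest -> layer bytes
        self.images = {}  # image name -> ordered list of layer digests

    def add_layer(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        self.blobs.setdefault(digest, content)  # identical content is stored once
        return digest

    def add_image(self, name: str, layers: list) -> None:
        self.images[name] = [self.add_layer(layer) for layer in layers]


store = LayerStore()
base = b"base OS files"
store.add_image("app-a", [base, b"app A code"])
store.add_image("app-b", [base, b"app B code"])
print(len(store.blobs))  # 3 unique layers for 4 layer references
```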

24. How to supervise Docker in production?

- Monitoring: Implement monitoring solutions to collect and analyze metrics from Docker containers, hosts, and orchestration platforms. Use tools like Prometheus, Grafana, Datadog, or Sysdig to monitor CPU usage, memory utilization, network traffic, disk I/O, container health, and other key performance indicators (KPIs).

- Logging: Configure centralized logging to capture logs from Docker containers and services. Use Docker's logging drivers (for example json-file, syslog, fluentd, or gelf), optionally together with log collectors such as Fluentd or Logstash, to forward logs to a centralized logging system such as Elasticsearch, Splunk, or Graylog.

- Security: Implement security best practices to protect Docker environments from vulnerabilities, threats, and unauthorized access. Use Docker Bench for Security or other security scanning tools to assess Docker hosts and containers for security risks and compliance with security benchmarks.

- Performance Optimization: Optimize Docker container and host configurations for performance and scalability. Tune kernel parameters, allocate appropriate resources (CPU, memory, disk) to containers, and implement resource constraints and limits using Docker Compose or Kubernetes resource management features.

- High Availability: Deploy Docker containers and services in a high-availability configuration to ensure fault tolerance and resilience against failures. Use container orchestration platforms like Kubernetes, Docker Swarm, or Amazon ECS to manage containerized workloads across multiple nodes or clusters.

- Backup and Disaster Recovery: Implement backup and disaster recovery strategies to protect critical data and ensure business continuity. Use container snapshots, volume backups, and database replication techniques to create backups of Dockerized applications and data.

25. What is Virtualization?

Virtualization is a technique, originally developed to logically partition mainframes, that allows multiple applications to run at once on the same hardware. With virtualization, you can run two different operating systems on the same machine. It uses software (such as a hypervisor) to create a virtual version of a resource such as a server, data storage, or application.

There are three types of virtualization:

- Paravirtualization

- Emulation

- Container-based virtualization

26. What is CNM?

CNM stands for Container Network Model. It defines how containers are networked while keeping the abstraction needed to support multiple network drivers. Its three components are:

- Sandbox: Holds the configuration of a container's network stack, such as its interfaces, routing table, and DNS settings. Each container has its own sandbox, which isolates its network configuration from other containers.

- Endpoint: Connects a sandbox to a network. A container can have one or more endpoints, which determine how it is attached to networks and with what configuration (e.g., IP address).

- Network: A group of endpoints that can communicate with each other directly. Networks define the connectivity rules and policies for the containers attached to them.

27. How to establish communication between Docker Daemon and Docker client?

The Docker client and the Docker daemon communicate through the Docker REST API, either over a UNIX socket (by default /var/run/docker.sock on Linux) or over a TCP network connection.
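The sketch below talks to the daemon the same way the CLI does by default: plain HTTP requests to the REST API over a UNIX socket, using only the Python standard library. GET /containers/json is the Engine API endpoint behind docker ps. The socket path is the common Linux default and an assumption about your setup; access usually requires root or membership in the docker group. UnixHTTPConnection and docker_get are illustrative names, not part of any Docker SDK.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # common default on Linux; adjust if needed


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a UNIX socket instead of a TCP host."""

    def __init__(self, socket_path: str, timeout: float = 10.0) -> None:
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send a GET request to the Docker Engine API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()


if __name__ == "__main__":
    # Roughly what `docker ps` does: list running containers.
    for container in docker_get("/containers/json"):
        print(container["Id"][:12], container["Image"], container["Status"])
```

Pointing the same client at a TCP endpoint would only require a regular http.client.HTTPConnection, which is the sense in which the REST API is independent of the transport.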

28. How to scale Docker containers?

- Docker containers can be scaled out to a very large number of instances; the practical limit is set by the resources available on your hosts.

- Each container needs CPU, memory, and OS resources from its host throughout its operation.

- So, the hosts must be able to provide these resources without compromise.

- The docker-compose command can be used to scale a service horizontally by setting the number of container instances it should run.

Syntax

docker-compose --file docker-compose-run-srvr.yml scale SERVICE=N

In the above command, --file passes the docker-compose-run-srvr.yml Compose file, SERVICE is the name of the service to scale, and N is an integer giving the total number of instances that service should run. In newer versions of Compose, the equivalent is docker compose up --scale SERVICE=N.

29. What are the various volume mount types accessible in Docker?

Three types of mounts are available in Docker:

- Bind Mounts: Bind mounts allow you to mount a directory or file from the host machine into a container. With bind mounts, the files and directories on the host are directly accessible within the container.

Example

docker run -v /host/path:/container/path ...

- Volumes: Docker volumes are created and managed by Docker itself and are stored in a location managed by Docker.

Example

docker run -v volume_name:/container/path ...

- tmpfs mounts: Stored only in the host system's memory and never written to the host's filesystem.

Example

docker run --tmpfs /container/path ...
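With the -v flag, the same syntax covers both bind mounts and named volumes; the source part decides which one you get. This sketch shows the distinction under simplified assumptions: Linux-style paths, where a source starting with / is a host path and anything else is treated as a volume name. parse_volume_flag is a hypothetical helper, and anonymous volumes (a lone -v /data) are not handled.

```python
from typing import NamedTuple


class MountSpec(NamedTuple):
    kind: str        # "bind" or "volume"
    source: str
    target: str
    read_only: bool


def parse_volume_flag(value: str) -> MountSpec:
    """Classify a `docker run -v SOURCE:TARGET[:OPTIONS]` value (simplified, Linux paths)."""
    parts = value.split(":")
    if len(parts) not in (2, 3):
        raise ValueError(f"expected SOURCE:TARGET[:OPTIONS], got {value!r}")
    source, target = parts[0], parts[1]
    options = parts[2].split(",") if len(parts) == 3 else []
    # An absolute host path means a bind mount; anything else names a Docker volume.
    kind = "bind" if source.startswith("/") else "volume"
    return MountSpec(kind, source, target, "ro" in options)
```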

30. Is there any limit on the number of containers running per host?

No, you can run as many containers per host as you want. Docker does not impose a fixed limit, but keep in mind that every container consumes memory, storage space, and CPU, and the size of the application also needs to be considered.

31. What are the Docker alternatives?

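Tools often mentioned as alternatives to (or complements of) Docker include Kubernetes (container orchestration), VirtualBox and Vagrant (virtual machines), Rancher, and Apache Mesos. The Open Container Initiative (OCI) is not a tool itself but an open standard for container images and runtimes that Docker and other tools implement.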

32. Is it possible to link containers in Docker?

Yes, containers can be linked using the legacy --link option of docker run. However, this method is not recommended for new applications; use user-defined Docker networks to enable communication between containers instead.

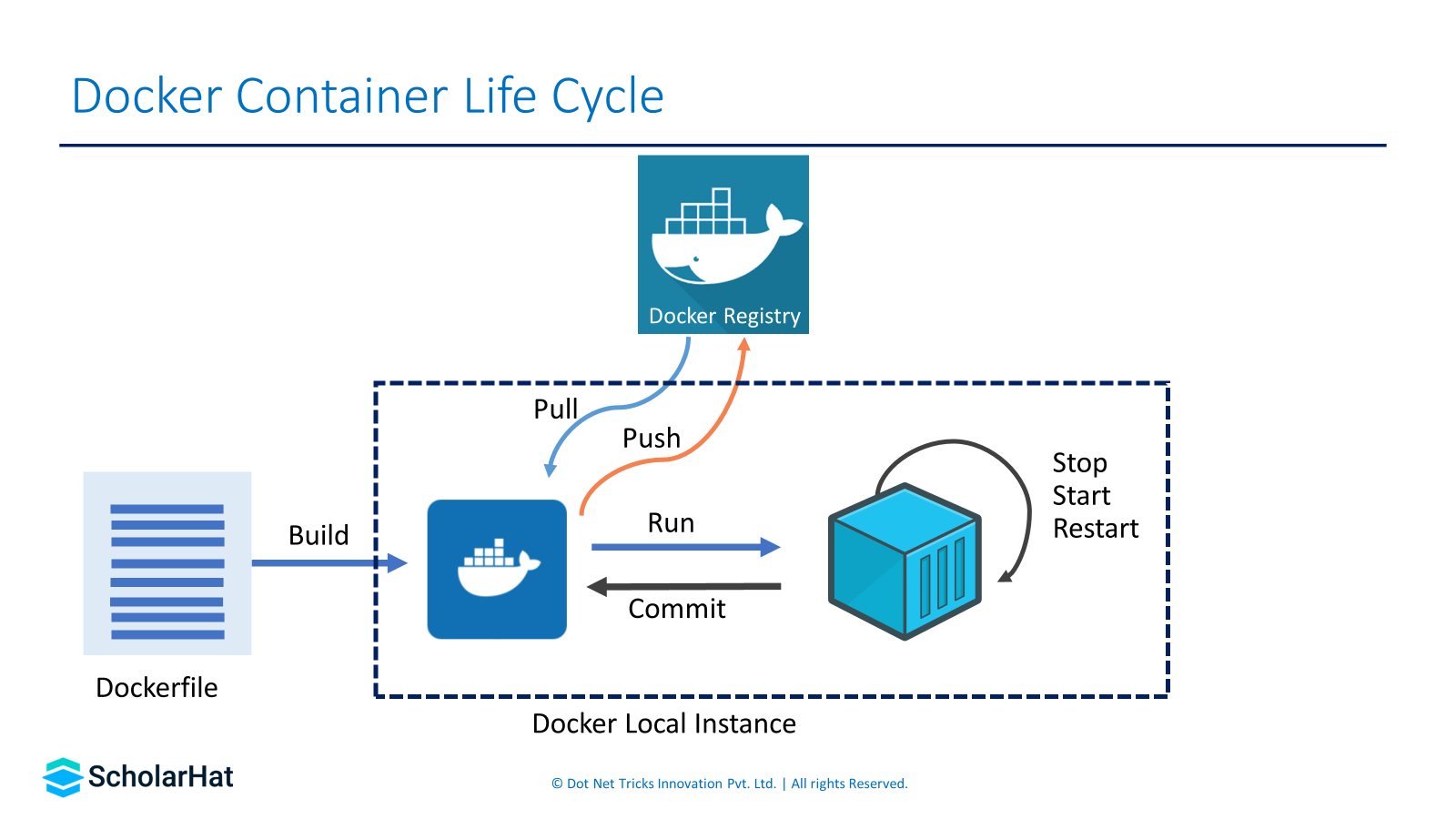

33. Explain the lifecycle of a Docker Container.

A docker container goes through the following stages in its lifecycle:

- Creating the container: A container is created from a Docker image with the docker create command, or with docker run, which creates and starts it in one step.

- Running the container: The container process runs inside an isolated environment with its own filesystem, network, and resources.

- Pausing the container: docker pause suspends all processes running inside the container, effectively freezing its state.

- Unpausing the container: docker unpause resumes the paused processes.

- Starting the container: docker start starts a created or stopped container, in the background by default.

- Stopping the container: docker stop gracefully shuts down the container's main process; the stopped container still exists.

- Restarting the container: docker restart is equivalent to docker stop followed by docker start on the same container.

- Killing the container: docker kill shuts down the container's main process abruptly (by sending SIGKILL by default).

- Destroying the container: docker rm removes the container; it no longer exists, and its allocated resources are released.
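The lifecycle above can be summarized as a small state machine. The sketch below is a simplified, hypothetical model rather than Docker's implementation: each command is accepted only from certain states, so, for example, removing a running or paused container is refused in this model.

```python
# Allowed transitions: (current state, command) -> next state.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("running", "kill"): "exited",
    ("running", "restart"): "running",  # stop followed by start
    ("exited", "start"): "running",
    ("exited", "restart"): "running",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


class Container:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = "created"  # as after `docker create`; `docker run` = create + start

    def apply(self, command: str) -> str:
        """Apply a lifecycle command, rejecting it if the current state does not allow it."""
        key = (self.state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {command} a container that is {self.state}")
        self.state = TRANSITIONS[key]
        return self.state
```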

34. How do you create a custom Docker network?

To create a custom Docker network, use the docker network create command followed by the desired network name.

Example

docker network create mynetwork

The above command creates a new custom network named "mynetwork" using the default bridge driver. You can also specify a different driver using the --driver option if needed.

35. What is Docker overlay networking?

- Docker overlay networking is a feature that allows containers running on multiple Docker hosts/nodes to communicate with each other across different physical or virtual networks.

- It enables containers running on different hosts to be part of the same virtual network even if they reside on separate physical networks.

- Overlay networking is a key component in Docker Swarm mode and is used to create a distributed network fabric that spans the Swarm cluster.

Advanced Docker Interview Questions for Experienced Professionals

36. What is container orchestration? Why is it required?

Docker container orchestration refers to managing and coordinating multiple containers in a distributed environment. It involves automating the deployment, scaling, scheduling, and monitoring of containers to ensure high availability, load balancing, fault tolerance, and efficient resource utilization.

Container orchestration platforms like Docker Swarm, Kubernetes, or HashiCorp Nomad provide tools and features to simplify the management of containerized applications at scale.

37. How to automate the deployment of Docker containers?

The deployment of Docker containers can be automated using the following tools and practices:

- Docker Compose: Define the application's services, networks, and volumes in a Compose file. Use the docker-compose command to deploy the containers with a single command.

- Docker Swarm or Kubernetes: Utilize container orchestration platforms to define and deploy the application as a service or a set of pods/replicas. This allows automatic scaling, load balancing, and self-healing capabilities.

- Continuous integration/continuous deployment (CI/CD) pipelines: Integrate Docker into CI/CD workflows using tools like Jenkins, GitLab CI/CD, or CircleCI to automatically build, test, and deploy Docker containers based on code changes.

- Infrastructure-as-code (IaC): Use tools like Terraform or AWS CloudFormation to define the infrastructure stack, including Docker hosts/clusters, networks, and storage, to enable automated provisioning and container deployment.


38. How many containers can run per host?

There can be as many containers as you want to run per host. Just remember that every container needs storage space, CPU, and memory which the hardware needs to support. You also need to consider the application size. Containers are considered to be lightweight but very dependent on the host OS.

39. What role does Docker API play in container management?

The Docker API provides a programmatic interface to interact with the Docker daemon and manage containers, images, networks, and other Docker resources. It allows developers and system administrators to automate container-related tasks. These include creating and managing containers, pulling and pushing images, inspecting container status, and interacting with Docker Swarm or other orchestration platforms.

40. What is Docker Machine and how is it used?

Docker Machine is a command-line tool that simplifies the process of provisioning and managing Docker hosts. It automates the creation of virtual machines and cloud instances and installs Docker on them.

It supports various platforms like local hypervisors, cloud providers, and remote Docker hosts. It allows developers to easily create Docker hosts with different configurations such as specifying CPU, memory, storage, and networking settings.

Docker Machine is particularly useful for local development environments, setting up test clusters, and deploying Docker on different infrastructure providers. Note that Docker Machine has since been deprecated and is no longer maintained.

41. What are the areas where Docker is being used?

Docker is being used in the following areas:

- Software Development: Docker is used in software development workflows to build, ship, and run applications consistently across different environments.

- Continuous Integration/Continuous Deployment (CI/CD): Docker enables automated testing, building, and deployment of applications.

- Microservices Architecture: Docker is used in microservices architecture to package and deploy individual services as lightweight, isolated containers.

- Cloud Computing: Cloud providers offer Docker-based services like AWS ECS, Azure Container Instances, and Google Kubernetes Engine (GKE) to simplify container deployment and management in the cloud.

- DevOps Practices: Docker is a key enabler of DevOps practices, allowing organizations to automate infrastructure provisioning, configuration management, and application deployment.

- Container Orchestration: Docker is used in conjunction with container orchestration platforms like Kubernetes, Docker Swarm, and Apache Mesos to automate the deployment, scaling, and management of containerized applications.

- Serverless Computing: Docker is used in serverless computing platforms like AWS Lambda, Azure Functions, and Google Cloud Run to package and deploy serverless functions as containers.

- Edge Computing: Docker is increasingly used in edge computing environments to deploy and manage applications at the network edge.

42. What is the purpose of the Docker plugin system?

The Docker plugin system allows extending Docker's functionality by integrating third-party plugins. Plugins can provide additional capabilities such as storage drivers, network drivers, authorization and authentication mechanisms, and volume drivers, etc.

The plugin system enables Docker to be highly extensible, allowing users to tailor Docker to their specific requirements or integrate it with existing infrastructure and tools. Plugins are developed using the Docker Plugin API and can be distributed and managed independently from the core Docker product.

43. How to manage Docker images in a private registry?

- Set up a private Docker registry using tools like Docker Registry or third-party solutions like Harbor or Nexus Repository Manager

- Authenticate with docker login, tag images with the registry's address, and push them to the private registry with the docker push command

- Pull Docker images from the private registry with the docker pull command, using the image name prefixed with the registry's address

- Manage access and permissions to the private registry by configuring authentication and authorization settings

- Apply image retention and deletion policies to manage storage usage and keep the registry organized

- Monitor the private registry for storage capacity, performance, and security.

44. Why is the "docker system prune" command used?

The docker system prune command is used to clean up unused Docker resources: stopped containers, networks not used by any container, dangling images, and unused build cache. Adding --volumes also removes unused volumes, and -a removes all unused images rather than only dangling ones. It helps reclaim disk space by removing resources that are no longer needed.

45. What is Docker's support for GPU acceleration?

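- Docker supports GPU acceleration through the NVIDIA Container Toolkit (formerly known as NVIDIA Docker).

- The toolkit extends Docker so that containers can work with NVIDIA GPUs; once it is installed, GPUs are exposed to a container with the --gpus flag, for example docker run --gpus all.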

- It allows containers to access and utilize the GPU resources available on the host system, enabling GPU-accelerated computations within Docker containers.

- This is particularly useful for applications that require high-performance computing, machine learning, and deep learning tasks that can benefit from GPU processing power.

46. How does Docker Swarm help in load balancing?

- Load balancing in Docker Swarm can be achieved by using the built-in load balancing feature.

- When a service is deployed in a Docker Swarm cluster, multiple containers are created to run the service.

- The Swarm's load balancer automatically distributes incoming requests across the available containers running the service, ensuring that the load is evenly distributed.

- This provides high availability and scalability for the application.
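As a rough illustration of spreading requests across a service's replicas, here is a plain round-robin dispatcher. It is a simplified model only: in Swarm, the routing mesh performs this balancing inside the kernel using IPVS, and the replica names below are hypothetical.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next replica in turn."""

    def __init__(self, replicas: list) -> None:
        if not replicas:
            raise ValueError("a service needs at least one replica")
        self._next_replica = cycle(replicas)

    def route(self) -> str:
        return next(self._next_replica)


balancer = RoundRobinBalancer(["web.1", "web.2", "web.3"])
assignments = Counter(balancer.route() for _ in range(9))
print(assignments)  # each replica receives 3 of the 9 requests
```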

47. Can you remove a paused container from Docker? Can a container restart by itself?

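- No, a paused container cannot be removed directly.

- It has to be unpaused and stopped before it can be removed.

- By default, a container does not restart by itself: the --restart policy defaults to no. Other policies, such as on-failure, always, and unless-stopped, make Docker restart the container automatically.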

48. What are the various methods to monitor Docker containers?

- Using Docker's built-in docker stats command (also available as docker container stats) to view live resource usage statistics.

- Integrating with container monitoring and logging tools like Prometheus, Grafana, or ELK stack (Elasticsearch, Logstash, Kibana) to collect and analyze container metrics and logs.

- Leveraging container orchestration platforms that offer built-in monitoring capabilities such as Docker Swarm's service metrics or Kubernetes' metrics API.

- Using specialized monitoring agents or tools that provide container-level insights and integration with broader monitoring and alerting systems.
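docker stats derives its CPU percentage from two consecutive samples returned by the Engine API's GET /containers/{id}/stats endpoint: the current values in cpu_stats and the previous ones in precpu_stats. The function below follows the formula given in the Engine API documentation for Linux containers; cpu_percent is a hypothetical helper name.

```python
def cpu_percent(stats: dict) -> float:
    """CPU usage (%) from one Engine API stats sample (Linux containers)."""
    cpu, precpu = stats["cpu_stats"], stats["precpu_stats"]
    cpu_delta = cpu["cpu_usage"]["total_usage"] - precpu["cpu_usage"]["total_usage"]
    system_delta = cpu.get("system_cpu_usage", 0) - precpu.get("system_cpu_usage", 0)
    if cpu_delta <= 0 or system_delta <= 0:
        return 0.0  # no previous sample yet, or no CPU time used
    # Use online_cpus, falling back to the number of per-CPU counters on older APIs.
    num_cpus = cpu.get("online_cpus") or len(cpu["cpu_usage"].get("percpu_usage") or []) or 1
    return cpu_delta / system_delta * num_cpus * 100.0
```

Multiplying by the number of CPUs is why docker stats can report values above 100% for a container that uses more than one core.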

49. What is the process for troubleshooting issues with Docker containers?

Troubleshooting Docker containers involves several steps:

- Check the container's logs for any error messages or abnormal behavior using the docker logs command.

- Inspect the container's metadata and runtime details with commands like docker inspect or docker stats.

- Verify the container's resource allocation and constraints such as CPU and memory limits.

- Check the host system's logs for any related issues or resource constraints.

- If networking issues are suspected, examine the container's network configuration and connectivity.

- Make sure that the Docker daemon and related services are running correctly.

- If necessary, recreate or redeploy the container to rule out any configuration or state-related issues.

50. Is it possible for the cloud to overtake the use of Containerization?

It's unlikely that the cloud will completely overtake the use of containerization, as both technologies serve different purposes and can complement each other in many scenarios.

51. What are the different states a Docker container can be in?

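A Docker container is always in one of the following states, as reported by docker ps -a and docker inspect:

- Created: The container has been created but has not been started.

- Running: The container's main process is running.

- Paused: All processes in the container are suspended until docker unpause is called.

- Restarting: The container is being restarted, for example by its restart policy.

- Exited: The container's main process has stopped. The container still exists and can be started again. This is what "stopped" means in Docker.

- Removing: The container is in the process of being removed.

- Dead: The daemon tried to remove the container but could not finish (for example, because a resource was busy). A dead container cannot be started again, only removed.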
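The main lifecycle transitions can be pictured as a small state machine. The Python sketch below is a toy model driven by docker command names; it is not Docker's implementation. It leaves out the restarting, removing, and dead states and forced removal with docker rm -f, and it encodes the rule from the previous question that a paused container cannot simply be removed.

```python
# Simplified model: (current state, docker command) -> next state.
# "exited" is what Docker reports for a stopped container. The restarting,
# removing and dead states, and forced removal with `docker rm -f`, are left out.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


class ContainerModel:
    """Tracks the state of one hypothetical container as commands are applied."""

    def __init__(self):
        self.state = "created"  # state right after `docker create`

    def apply(self, command):
        key = (self.state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {command} a container that is {self.state}")
        self.state = TRANSITIONS[key]
        return self.state
```

Running start, pause, unpause, stop, and rm in that order walks the container through its whole life. Calling rm while it is paused raises an error instead.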

52. What is the difference between CMD and ENTRYPOINT in a Dockerfile?

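- CMD: Provides the default command for the container or, when an ENTRYPOINT is also defined, default arguments for it. Anything you pass after the image name in docker run replaces CMD.

- ENTRYPOINT: Configures the container to run as an executable. Arguments passed to docker run are appended to it rather than replacing it; the entrypoint itself can only be overridden with the --entrypoint flag.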
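To make the interaction concrete, the Python sketch below computes the command a container would start with from exec-form (JSON array) ENTRYPOINT and CMD values, the arguments given to docker run, and an optional --entrypoint override. resolve_command is a hypothetical helper, not a Docker API. Shell-form instructions, where ENTRYPOINT ignores CMD, are deliberately not modeled.

```python
def resolve_command(entrypoint, cmd, run_args=None, entrypoint_override=None):
    """Return the argv a container would start with (exec-form ENTRYPOINT/CMD only).

    entrypoint, cmd: lists from the image, e.g. ENTRYPOINT ["python", "app.py"]
                     and CMD ["--port", "8000"].
    run_args: arguments given after the image name in `docker run`.
    entrypoint_override: value of `docker run --entrypoint`, if any.
    """
    if entrypoint_override is not None:
        entrypoint = [entrypoint_override]
        cmd = []  # --entrypoint also clears the image's default CMD
    # Arguments passed to `docker run` replace CMD; they never replace ENTRYPOINT.
    args = list(run_args) if run_args else list(cmd)
    return list(entrypoint) + args
```

With ENTRYPOINT ["python", "app.py"] and CMD ["--port", "8000"], a plain docker run starts python app.py --port 8000. Passing --port 9000 after the image name only swaps the arguments.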

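53. What is the use of the .dockerignore file?

A .dockerignore file in the root of the build context lists patterns for files and directories that should not be sent to the Docker daemon when building an image, for example .git, node_modules, local logs, or .env files. This keeps the build context small, speeds up builds, avoids unnecessary cache invalidation, and prevents secrets from accidentally ending up in the image.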
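The Python sketch below shows how such ignore rules are evaluated. It is a simplified approximation of Docker's matcher, not its actual code. Here * and ? do not cross a /, ** matches any number of directories, a leading ! re-includes a path, the last matching rule wins, and a pattern that matches a directory also excludes everything beneath it. The function names and sample patterns are illustrative.

```python
import re


def _to_regex(pattern):
    """Translate one .dockerignore-style pattern into a compiled regex."""
    out, i = [], 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            out.append("(?:.*/)?")  # zero or more leading directories
            i += 3
        elif pattern.startswith("**", i):
            out.append(".*")  # anything, including slashes
            i += 2
        elif pattern[i] == "*":
            out.append("[^/]*")  # anything within one path segment
            i += 1
        elif pattern[i] == "?":
            out.append("[^/]")  # exactly one character, not a slash
            i += 1
        else:
            out.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(out))


def is_excluded(path, patterns):
    """Return True if `path` (relative to the build context) would be ignored."""
    parts = path.strip("/").split("/")
    # Check the path itself and every parent directory of it.
    candidates = ["/".join(parts[:i]) for i in range(1, len(parts) + 1)]
    excluded = False
    for raw in patterns:
        raw = raw.strip()
        if not raw or raw.startswith("#"):
            continue  # blank lines and comments
        negate = raw.startswith("!")
        regex = _to_regex((raw[1:] if negate else raw).strip("/"))
        if any(regex.fullmatch(c) for c in candidates):
            excluded = not negate  # the last matching rule decides
    return excluded
```

For example, with the rules node_modules, *.log, and !important.log, the file app.log is excluded, important.log is kept, and src/app.log is kept because *.log only matches at the top level.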

54. What is a Docker volume and how is it different from a bind mount?

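- Volumes: Created and managed by Docker and stored in Docker's own storage area on the host (/var/lib/docker/volumes on Linux). They are independent of the host's directory layout, are the preferred way to persist container data, and can be shared between containers.

- Bind Mounts: Mount a specific file or directory from the host filesystem into a container. Because they depend on the host's directory structure, they are mainly used for development and debugging, for example to mount source code into a container.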
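Both kinds of mount can be requested with the same --mount flag; only the type and the meaning of source differ. The Python sketch below builds that flag for docker run. The helper name, the volume name pgdata, and the paths are hypothetical. It assumes a Linux host and, as a safe choice, requires an absolute host path for bind mounts.

```python
def mount_flag(kind, source, target, readonly=False):
    """Build a `--mount` argument for `docker run`.

    kind="volume": source is a Docker-managed volume name.
    kind="bind":   source is a path on the host.
    """
    if kind not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type: {kind!r}")
    if kind == "bind" and not source.startswith("/"):
        raise ValueError("this sketch requires an absolute host path for bind mounts")
    opts = [f"type={kind}", f"source={source}", f"target={target}"]
    if readonly:
        opts.append("readonly")  # mount read-only inside the container
    return ["--mount", ",".join(opts)]
```

mount_flag("volume", "pgdata", "/var/lib/postgresql/data") yields a volume that Docker manages and that outlives the container. mount_flag("bind", "/home/dev/app", "/app") exposes a host directory instead.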

55. How does Docker handle networking?

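Docker provides networking through pluggable network drivers:

- Bridge: The default driver. Containers on the same host communicate through a software bridge; on user-defined bridge networks they can also reach each other by container name.

- Host: Removes network isolation between the container and the Docker host; the container uses the host's network stack directly.

- Overlay: Connects containers running on different Docker hosts, for example the services of a Swarm cluster.

- Macvlan: Assigns a MAC address to each container so it appears as a physical device on the network.

- None: Disables networking for the container.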
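A common pattern is to create a user-defined bridge network and attach related containers to it so they can find each other by name. The Python sketch below only builds the docker commands, without running them. The network name appnet, the container names, and the images are hypothetical. Host and none are not created here because they already exist as built-in networks and are selected with --network host or --network none.

```python
# Drivers this sketch passes to `docker network create`. host and none already
# exist as single built-in networks and are used via `--network host|none`.
# macvlan is left out because it also needs options such as --subnet and -o parent=<iface>.
CREATABLE_DRIVERS = {"bridge", "overlay"}


def create_network_argv(name, driver="bridge"):
    """Build a `docker network create` command for a user-defined network."""
    if driver not in CREATABLE_DRIVERS:
        raise ValueError(f"driver {driver!r} is not handled by this sketch")
    argv = ["docker", "network", "create", "--driver", driver]
    if driver == "overlay":
        # Overlay networks need swarm mode; --attachable lets standalone containers join too.
        argv.append("--attachable")
    argv.append(name)
    return argv


def run_argv(name, image, network):
    """Build a `docker run` command that attaches the container to `network`."""
    return ["docker", "run", "-d", "--name", name, "--network", network, image]
```

After running create_network_argv("appnet") and then run_argv("cache", "redis:7", "appnet"), another container on appnet can reach the first one at the hostname cache. Each list can be passed to subprocess.run on a host with Docker.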

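56. What is Docker swarm mode and how is it different from Docker Compose?

Docker Compose defines and runs a multi-container application on a single Docker host from a YAML file (docker compose up). Swarm mode is Docker's built-in orchestrator: it joins multiple Docker hosts into a cluster of manager and worker nodes and runs services with replicas, rolling updates, overlay networking, and secrets. The two work together, because a Compose file can be deployed to a swarm as a stack with docker stack deploy.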

57. How can you secure a Docker container?

- Use reputable and official images.

- Use the least privilege concept by launching containers as non-root users.

- Update Docker regularly and rebuild images frequently to pick up security patches.

- Make use of the AppArmor, SELinux, and seccomp security features supported by Docker.

- Apply firewall rules and network segmentation.

- Monitor and audit container activity.

58. How do you troubleshoot a failing Docker container?

- Examine the container's logs with docker logs [container_id].

- Use docker inspect [container_id] to get the container's configuration and state, including its exit code.

- Use docker exec -it [container_id] /bin/bash (or /bin/sh on minimal images such as Alpine) to open a shell inside a running container and explore its file system.

- Examine the Docker daemon logs, for example with journalctl -u docker.service on systemd-based hosts.

- Track resource use and container performance with monitoring tools.

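59. What is the purpose of Docker Compose’s depends_on option?

depends_on declares startup and shutdown dependencies between services. Compose starts the listed services before the service that depends on them and stops services in reverse dependency order. By default it only waits for the dependency's container to start, not for the application inside it to be ready. Using the long syntax with condition: service_healthy makes Compose wait until the dependency's healthcheck passes.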
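Compose's depends_on ordering is a dependency graph, and a valid start order is a topological sort of that graph. The Python sketch below uses the standard library's graphlib (Python 3.9+) to compute one such order for a hypothetical web/api/db/cache stack. Compose itself may start independent services in parallel, so this only illustrates the ordering constraint. A circular dependency raises graphlib.CycleError, which mirrors the fact that such a stack cannot be started.

```python
from graphlib import TopologicalSorter


def start_order(services):
    """services maps each service name to the list of services it depends_on."""
    return list(TopologicalSorter(services).static_order())


def stop_order(services):
    """Services are stopped in the reverse of their start order."""
    return list(reversed(start_order(services)))
```

For {"web": ["api"], "api": ["db", "cache"], "db": [], "cache": []}, db and cache come before api, and api comes before web.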

60. What is Docker Secret and how is it used?

Docker secrets let you manage sensitive data, such as certificates, API keys, and passwords, securely. Secrets are a Docker Swarm feature: they are encrypted in transit and at rest, and are only made available to the services that have been granted access to them. To use Docker secrets:

- Use docker secret create [secret_name] [file] to create a secret.

- Reference the secret in your service definition or Docker Compose file.

- Inside the container, read the secret from the file /run/secrets/[secret_name].
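Inside the application, a secret is just a file. The Python sketch below reads one, assuming the default /run/secrets mount point; the helper name and the secret name db_password are hypothetical. It strips only the trailing newline that a file or an echo piped into docker secret create typically adds, and it fails loudly with FileNotFoundError if the secret is not mounted.

```python
from pathlib import Path


def read_secret(name, secrets_dir="/run/secrets"):
    """Read a Docker secret mounted as a file; raises FileNotFoundError if absent."""
    # Strip only the trailing newline, keeping any other characters of the secret intact.
    return (Path(secrets_dir) / name).read_text().rstrip("\n")
```

For example, read_secret("db_password") returns the password for a service that was granted the db_password secret. The secrets_dir parameter exists only so the function can be tested outside a container.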

Summary

In this Docker tutorial, we covered the most important Docker interview questions and answers. Preparing thoroughly with them will help you demonstrate your experience and your readiness to take on the challenges of a Docker-centric career.

| Download this PDF Now - Docker Interview Questions and Answers PDF By ScholarHat |

Crack Your Next Docker Interview – Grab the Free Expert eBook!

Unlock expert-level Docker interview preparation with our exclusive eBook! Get instant access to a curated collection of real-world interview questions, detailed answers, and professional insights, all designed to help you succeed.

No downloads needed: just quick, free access to the ultimate guide for Docker interviews. Start your preparation today and move one step closer to your dream job!

FAQs

- What is Docker, and how does it differ from virtual machines?

- Explain the architecture of Docker.

- What is a Docker container?

- What is a Docker image, and how is it created?

- How do Docker containers isolate applications?

- How do you start, stop, and restart a Docker container?

- How can you list all running containers?

- What is the difference between docker run and docker start?

- How do you remove a Docker container and image?

- How do you inspect a Docker container?

- What is a Dockerfile, and how do you create one?

- Explain the different instructions used in a Dockerfile.

- How do you optimize a Dockerfile for better performance?

- What is a multi-stage build in Docker?

- How do you build and tag a Docker image?

- How does Docker handle networking?

- What is the difference between bridge and host networking?

- How do you expose ports in Docker?

- How do you create and use Docker volumes?

- What are bind mounts and named volumes?

- What is Docker Compose, and why is it used?

- How do you define services in a docker-compose.yml file?

- How do you scale services using Docker Compose?

- How do you use environment variables in Docker Compose?

- Explain the process of building and running a multi-container application with Docker Compose.

- What is Docker Swarm, and how does it work?

- How does Kubernetes relate to Docker?

- Explain the concept of microservices and how Docker facilitates microservices architecture.

- How do you handle logging and monitoring for Docker containers?

- What are some best practices for securing Docker containers?

- How would you migrate a traditional application to Docker?

- Describe a situation where Docker helped solve a complex deployment issue.

- How do you handle persistent storage in Docker for stateful applications?

- Explain a challenging problem you faced with Docker and how you resolved it.

- How would you set up a CI/CD pipeline with Docker?

- How do you troubleshoot a failing Docker container?

- What steps would you take if a Docker container is consuming too much memory?

- How do you optimize Docker image size?

- What are some common issues with Docker networking, and how do you resolve them?

- How do you manage and clean up unused Docker resources?

- Experience Required: 0-1 year

- Expectations:

- Basic understanding of Docker concepts.

- Ability to create and run simple Docker containers.

- Familiarity with Docker commands and basic Dockerfile creation.

- Some exposure to Docker Compose.

- Experience Required: 1-3 years

- Expectations:

- Proficiency in creating and managing Docker containers and images.

- Experience with Docker Compose for multi-container applications.

- Ability to optimize Dockerfiles and troubleshoot common Docker issues.

- Understanding of Docker networking and storage solutions.

- Exposure to CI/CD pipelines with Docker.

- Experience Required: 3-5 years

- Expectations:

- Advanced knowledge of Docker architecture and best practices.

- Expertise in optimizing and securing Docker containers.

- Experience with orchestration tools like Docker Swarm and Kubernetes.

- Ability to design and implement complex multi-container applications.

- Proficiency in integrating Docker into CI/CD pipelines.

- Experience with monitoring and logging for Dockerized applications.

- Experience Required: 2-5 years

- Expectations:

- Deep understanding of Docker and containerization principles.

- Extensive experience with Docker Compose, Docker Swarm, and Kubernetes.

- Ability to automate the deployment and scaling of applications using Docker.

- Expertise in integrating Docker with various CI/CD tools and workflows.

- Knowledge of container security and compliance.

- Proficiency in troubleshooting and performance tuning of Docker environments.

- Experience Required: 1-3 years (with Docker, more with general system administration)

- Expectations:

- Solid understanding of Docker installation, configuration, and maintenance.

- Ability to manage Docker networks and storage.

- Experience with monitoring Docker containers and resource management.

- Basic scripting skills for automating Docker tasks.

- Familiarity with backup and recovery of Docker containers and volumes.

- Experience Required: 2-4 years (with Docker, more with cloud platforms)

- Expectations:

- Proficiency in deploying Docker containers in cloud environments (AWS, Azure, GCP).

- Experience with container orchestration in the cloud.

- Understanding of cloud-native applications and microservices architecture.

- Knowledge of integrating Docker with cloud services and tools.

- Ability to manage security, scalability, and performance of Dockerized applications in the cloud.

- Experience Required: 5+ years

- Expectations:

- Extensive experience with Docker and containerization strategies.

- Ability to design and oversee the implementation of containerized solutions.

- Expertise in integrating Docker with various enterprise systems and workflows.

- Strong understanding of microservices architecture and cloud-native principles.

- Experience in mentoring and guiding teams in best practices for Docker usage.

- Knowledge of emerging trends and technologies in containerization.

The employee should have a solid understanding of Docker architecture, images, containers, and registries.

He should also be proficient in using Docker CLI commands to manage containers, images, volumes, and networks.

2. Problem-Solving Mindset

He should have the ability to diagnose and resolve issues related to Docker containers, such as performance bottlenecks, networking issues, and application failures.

3. DevOps and Automation

He should also be experienced in integrating Docker with continuous integration and continuous deployment (CI/CD) pipelines.

He should be proficient in automating the deployment, scaling, and management of Docker containers using scripts and tools.

5. Collaboration and Communication

He should have the ability to work effectively in cross-functional teams, collaborating with developers, system administrators, and other stakeholders.

He should also have clear and effective communication skills to explain technical concepts to non-technical team members and stakeholders.

6. Adaptability and Continuous Learning

He must be willing to learn about new Docker features, updates, and best practices.

He should have the ability to adapt to changing technologies, tools, and processes in the fast-evolving containerization landscape.

- Projects:

- Open Source Contributions:

- Docker Certified Associate (DCA)

- Blog Posts

- Documentation

- Meetups and Conferences:

- Workshops

- Sample Projects

- Explain core Concepts

- Architecture Diagrams

- Practical Scenarios

- Container Isolation: Know how Docker uses Linux namespaces to isolate containers from each other and from the host system, and control groups (cgroups) to limit the resources they can use.

- Least Privilege Principle: Understand why containers should run with the least privileges necessary and avoid running containers as the root user.

- Image Vulnerabilities: Be aware that using untrusted or outdated images can introduce vulnerabilities.

- Misconfigured Dockerfiles: Understand the implications of improper Dockerfile configurations, such as using ADD instead of COPY or including sensitive data.

- Network Exposure: Know the risks of exposing container services to the public internet.

- Use Official and Verified Images: Always use official or verified images from trusted sources.

- Regularly Update Images: Keep images up to date with the latest security patches.

- Minimize Image Size: Use minimal base images, such as alpine or scratch, to reduce the attack surface.

- Implement Multi-Stage Builds: Use multi-stage builds to create lean and secure final images.

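- Scan Images for Vulnerabilities: Use scanners such as Trivy, Clair, or Docker Scout to check images for known vulnerabilities. Docker Bench for Security complements them by auditing the host and daemon configuration against the CIS Docker Benchmark.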

- Run as Non-Root: Ensure that containers do not run as the root user by specifying a non-root user in the Dockerfile.

- Limit Container Capabilities: Use the --cap-drop and --cap-add flags to restrict container capabilities to the minimum necessary.

- Use Read-Only Filesystems: Make container filesystems read-only whenever possible by using the --read-only flag.

- Use Secrets Management: Store and manage sensitive information using Docker secrets instead of hardcoding them in Dockerfiles or environment variables.

- Isolate Containers: Use user-defined bridge networks to isolate containers and control communication.

- Limit Published Ports: Only expose necessary ports and avoid using the --network host option unless absolutely necessary.

- Use TLS for Communication: Secure the Docker daemon and client communication with TLS.

- Resource Limits: Use the --memory and --cpus flags to cap each container's memory and CPU usage, so that a single container (for example, one under a DoS attack) cannot starve the host or other containers.

- Logging and Monitoring: Implement logging and monitoring for container activities using tools like Docker logging drivers, ELK stack, or Prometheus.
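Several of these runtime practices can be combined in a single docker run invocation. The Python sketch below builds such a command as an argument list. The UID 10001, the limits, the image name, and the helper name are hypothetical defaults. --security-opt no-new-privileges is an extra hardening flag not listed above, and --tmpfs /tmp is included because many applications still need a writable scratch directory when the root filesystem is read-only.

```python
def hardened_run_argv(image, user="10001:10001", memory="256m", cpus="0.5",
                      add_caps=(), ports=()):
    """Build a `docker run` command applying several of the practices above."""
    argv = [
        "docker", "run", "-d",
        "--user", user,                         # run as a non-root UID:GID
        "--read-only",                          # read-only root filesystem
        "--tmpfs", "/tmp",                      # writable scratch space the app may still need
        "--cap-drop", "ALL",                    # drop every Linux capability...
        "--security-opt", "no-new-privileges",  # block privilege escalation via setuid binaries
        "--memory", memory,                     # memory limit
        "--cpus", cpus,                         # CPU limit
    ]
    for cap in add_caps:                        # ...then add back only what is required
        argv += ["--cap-add", cap]
    for host_port, container_port in ports:
        # Publish only the needed ports, bound to localhost rather than all interfaces.
        argv += ["-p", f"127.0.0.1:{host_port}:{container_port}"]
    argv.append(image)                          # image name must come last
    return argv
```

The resulting list can be passed to subprocess.run on a Docker host. The sketch never adds --network host, in line with the advice to avoid it unless absolutely necessary.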
