27 Feb

Feature Selection Techniques in Machine Learning

Introduction

Feature selection techniques are essential in machine learning because they identify the most useful and relevant features in a dataset. As large, complex datasets become more readily available, feature selection has become a standard step in model development. It aims to reduce the dataset's dimensionality by removing irrelevant, redundant, or noisy features, which can improve model performance, reduce overfitting, and increase interpretability.

Choosing relevant features matters for several reasons. First, in high-dimensional datasets the data become sparse relative to the number of features, and the resulting complexity can make it hard for a model to generalize. Selecting relevant features reduces the dimensionality, which tends to yield more efficient and more accurate models.

Feature selection can also help prevent overfitting. A model trained on too many features may fit noise or irrelevant patterns in the data, which hinders its ability to generalize. Removing extraneous features makes the model less complex, lowering the risk of overfitting and improving its ability to generalize to new data.

Improved interpretability is another benefit of feature selection. With fewer features, the relationships and trends in the data are easier to understand. This is particularly important in fields such as healthcare, banking, and law, where interpretability is essential. By choosing the most relevant features, data scientists and domain experts can gain useful insights and make informed decisions based on the model's output.

Available feature selection strategies include filter methods, wrapper methods, embedded methods, and dimensionality reduction techniques. Filter methods evaluate the relevance of features based on their statistical properties, while wrapper methods evaluate subsets of features using a particular machine learning model. Embedded methods integrate feature selection into the model training process, whereas dimensionality reduction techniques transform the high-dimensional feature space into a lower-dimensional representation; strictly speaking, these construct new features rather than select existing ones.
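To make the idea of a filter method concrete, here is a minimal sketch that ranks features by the absolute value of their Pearson correlation with the target, without training any model. The function names (`pearson`, `rank_by_correlation`) and the toy data are hypothetical and chosen only for illustration; a real project would more likely use a library routine.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)

def rank_by_correlation(columns, target):
    """Return feature names sorted by |correlation| with the target, strongest first."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical toy data: three named feature columns and a numeric target.
columns = {
    "size": [1.0, 2.0, 3.0, 4.0, 5.0],
    "noise": [2.0, -1.0, 2.0, -1.0, 2.0],
    "constant": [7.0, 7.0, 7.0, 7.0, 7.0],
}
target = [2.1, 3.9, 6.2, 8.1, 9.8]

ranking = rank_by_correlation(columns, target)
top_features = ranking[:1]  # keep only the single best-scoring feature
```

Because each feature is scored on its own, this kind of filter is cheap, but it cannot detect features that are only useful in combination, and Pearson correlation captures linear relationships only.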

Feature selection

- In machine learning, feature selection is the process of choosing a smaller subset of relevant features from the larger set available in a dataset. It seeks to identify the most discriminative and informative features, those that increase a model's predictive power, while eliminating unnecessary or redundant ones.

- By lowering the dimensionality of the dataset, feature selection aims to improve the performance of a machine learning model. Large feature sets in high-dimensional datasets pose several problems: they raise the likelihood of overfitting, where the model memorizes noise or misleading patterns rather than learning useful associations in the data, and they increase both the computational cost and the amount of data needed for training.

- Choosing the most relevant features addresses these issues in several ways. First, it reduces the complexity of the model, making it easier to recognize patterns in the data and generalize from them. Second, it improves computational efficiency by requiring less memory and processing. Third, it reduces the risk of overfitting by removing distracting or irrelevant features that add needless complexity.

Feature selection in machine learning

Feature selection in machine learning means choosing the most relevant and informative features from a broader pool of available ones. By keeping only a subset of features, it seeks to boost model performance, decrease complexity, and increase interpretability. This focuses the model on the crucial aspects of the data, which can improve prediction accuracy, reduce overfitting, speed up computation, and make the model easier to interpret. Filter methods, wrapper methods, and embedded methods are used to evaluate feature importance and optimize the choice of subset.

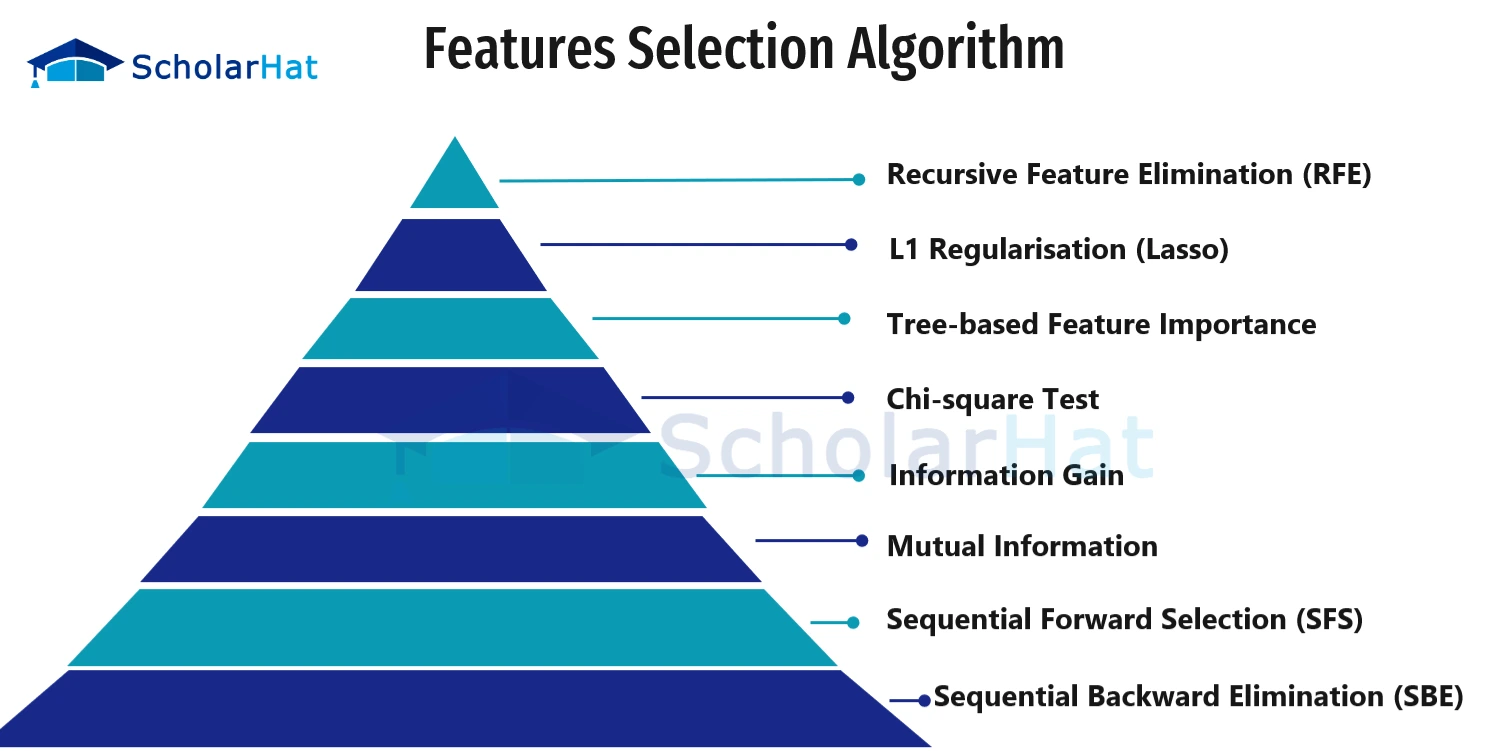

Feature Selection Algorithms

Numerous feature selection algorithms are commonly used in machine learning. Each aims to find and select the most important features in a given dataset. Popular algorithms include the following:

- Recursive Feature Elimination (RFE): RFE is a wrapper-based approach that recursively eliminates features: it trains a model on the current feature set, removes the least important features according to their weights or importance scores, and repeats. It continues until a predetermined number of features remains or a stopping criterion is met.

- L1 Regularisation (Lasso): L1 regularisation is an embedded feature selection approach that adds to the model's objective function a penalty term proportional to the sum of the absolute values of the feature coefficients. This promotes sparse solutions and tends to drive the coefficients of unnecessary features to exactly zero, which effectively performs feature selection.

- Tree-based Feature Importance: Tree-based algorithms such as Random Forest and Gradient Boosting can compute an importance score for each feature, typically from how much the splits on that feature reduce impurity across the trees or, in some implementations, from how often the feature is used to split the data. Features with higher importance scores are deemed more relevant, and features can be selected based on these scores.

- Chi-square Test: The chi-square test is a filter-based approach for categorical features. It tests whether a feature and the target variable are independent by comparing the observed frequencies with the frequencies expected under independence. Features whose chi-square statistic exceeds a chosen threshold are regarded as more relevant.

- Information Gain: Another filter-based technique, information gain measures the reduction in entropy (uncertainty) of the target variable once a feature's value is known. Features with higher information gain tell us more about the target variable and are chosen first.

- Mutual Information: This filter-based approach estimates how much information a feature provides about the target variable by quantifying the dependence between the two. Higher mutual information indicates a stronger dependence between the feature and the target, and therefore greater relevance.

- Sequential Forward Selection (SFS): SFS is a wrapper-based method that starts with an empty feature set and adds one feature at a time, each time choosing the feature that improves model performance the most. It builds the subset incrementally until the target number of features is reached or the performance gain falls below a predetermined threshold.

- Sequential Backward Elimination (SBE): SBE is a wrapper-based technique that starts with all features and removes one at a time, each time removing the feature whose removal hurts model performance the least. It continues until a predetermined number of features remains or performance declines beyond a predetermined threshold.
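The greedy loop behind sequential forward selection can be sketched in a few lines. This is only an illustration under stated assumptions: the `score` callback stands in for "train a model on this subset and evaluate it" (for example, cross-validated accuracy), the `toy_score` function and feature names are hypothetical, and only the fixed-size stopping rule is implemented, not the performance-threshold one.

```python
def sequential_forward_selection(features, score, k):
    """Greedily grow a feature subset until it holds k features.

    features: iterable of candidate feature names
    score:    callable taking a list of feature names and returning a number
              (higher is better), e.g. a cross-validated model score
    k:        target subset size
    """
    selected = []
    remaining = list(features)
    while remaining and len(selected) < k:
        # Try each remaining feature and keep the one that scores best
        # when added to the subset chosen so far.
        best = max(remaining, key=lambda f: score(selected + [f]))
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical scoring function standing in for model training and evaluation.
def toy_score(subset):
    gain = {"age": 0.30, "income": 0.25, "zip_code": 0.02, "income_copy": 0.25}
    total = sum(gain[f] for f in subset)
    # A duplicated feature adds nothing once its twin is already present.
    if "income" in subset and "income_copy" in subset:
        total -= 0.25
    return total

chosen = sequential_forward_selection(
    ["age", "income", "zip_code", "income_copy"], toy_score, k=3
)
```

With this toy scorer, `chosen` is `["age", "income", "zip_code"]`: the redundant `income_copy` is skipped because it adds nothing once `income` is selected. Backward elimination follows the same pattern in reverse, starting from the full set and dropping the feature whose removal costs the least.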

Feature selection techniques in machine learning

Feature selection can be carried out using a variety of techniques, each with distinct advantages and goals. Some frequently used ones are:

- Pearson's Correlation: Measures the strength of the linear relationship between each feature and the target variable.

- Chi-square Test: Tests whether a categorical feature and the target variable are independent.

- Information Gain: Measures how much the entropy (uncertainty) of the target variable is reduced by knowing a feature's value.

- Variance Threshold: Discards features with low variance on the grounds that they are unlikely to carry much information.

- Recursive Feature Elimination (RFE): Recursively reduces the feature set by training a model and removing the least significant features.

- Forward Selection: Starts with an empty set of features and adds the most useful remaining feature one at a time until a stopping criterion is satisfied.

- Backward Elimination: Starts with all features and, in each iteration, eliminates the least important feature until a stopping criterion is satisfied.

- Genetic Algorithms: Use an evolutionary search to look for a good subset of features, scored by a fitness function.

- L1 Regularisation (Lasso): Adds a penalty on the absolute values of the coefficients to the model's objective function, which promotes sparse solutions and thereby performs feature selection.

- Ridge Regression: Applies L2 regularisation to the model, which shrinks the coefficients of unimportant features towards zero but does not usually set them exactly to zero.

- Elastic Net: Strikes a compromise between feature selection and coefficient shrinkage by combining L1 and L2 regularisation.

- Principal Component Analysis (PCA): Projects the original features onto a lower-dimensional space while retaining as much of the variance as possible.

- Linear Discriminant Analysis (LDA): Projects the features into a lower-dimensional space chosen to maximize the separation between classes.

- t-Distributed Stochastic Neighbour Embedding (t-SNE): Visualizes high-dimensional data in a lower-dimensional space, with an emphasis on preserving local neighbourhood structure.
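Of the techniques listed above, information gain is one of the easiest to work through by hand. The sketch below computes it for categorical features as the entropy of the target minus the weighted entropy of the target within each feature value. The function names and the four-row toy dataset are hypothetical, and continuous features would first need to be binned.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(feature_values, labels):
    """Entropy of the labels minus their weighted entropy within each feature value."""
    n = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional

# Hypothetical toy data: one feature that determines the label, one that does not.
labels = ["yes", "yes", "no", "no"]
perfect = ["a", "a", "b", "b"]   # splits the labels cleanly
useless = ["a", "b", "a", "b"]   # each value sees both labels equally often

gain_perfect = information_gain(perfect, labels)
gain_useless = information_gain(useless, labels)
```

Here the target has one bit of entropy. The `perfect` feature removes all of it, so its gain is 1 bit, while the `useless` feature removes none, so its gain is 0. Ranking features by this score and keeping the top few is a simple filter-style selection.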

Summary

Feature selection is crucial in machine learning for identifying and choosing relevant features from a dataset. It addresses the problems of high dimensionality, overfitting, and poor interpretability. The main strategies are filter methods, wrapper methods, embedded methods, and dimensionality reduction techniques.

Filter methods assess the importance of a feature using statistical measures such as correlation, chi-square tests, information gain, or a variance threshold. Wrapper methods evaluate feature subsets with a machine learning model, using procedures such as recursive feature elimination, forward selection, backward elimination, or genetic algorithms. Embedded approaches incorporate feature selection into model training through regularisation techniques such as L1 regularisation (Lasso), ridge regression, or elastic net. Dimensionality reduction techniques such as PCA, LDA, and t-SNE convert high-dimensional data into a lower-dimensional representation.

The choice of technique depends on the properties of the dataset, the desired model performance, the need for interpretability, and the computational resources available. Used well, feature selection can improve model performance, reduce overfitting, and make models easier to interpret, resulting in more accurate and more efficient predictions.